A collaborative extended reality tool to examine tumour evolution (Phase II)

Rose McColl, Sina Masoud-Ansari, Technical Specialists, Centre for eResearch

Background and objectives

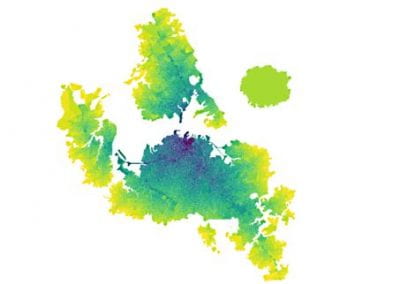

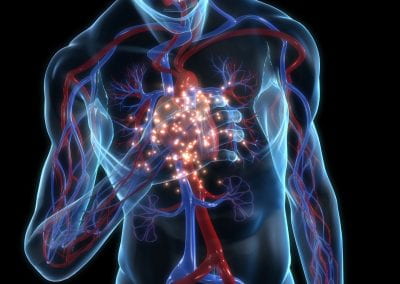

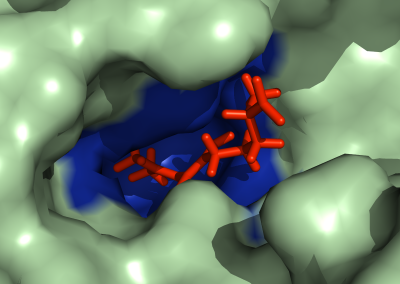

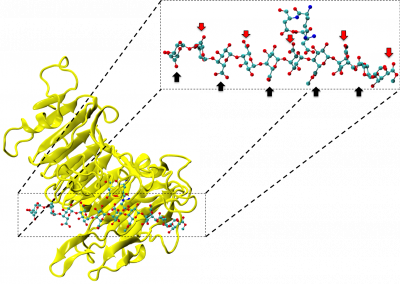

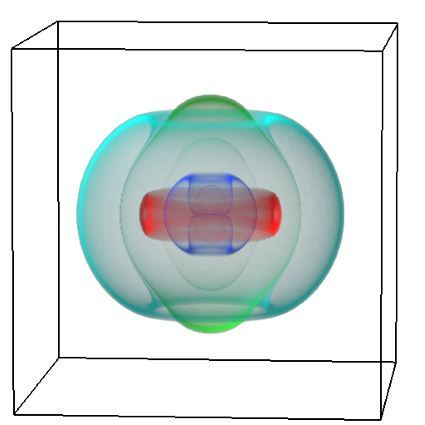

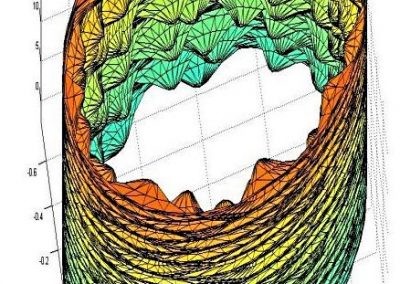

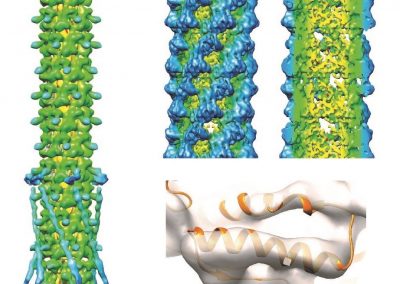

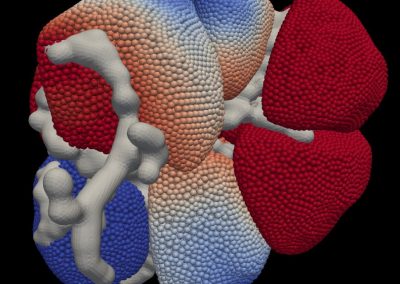

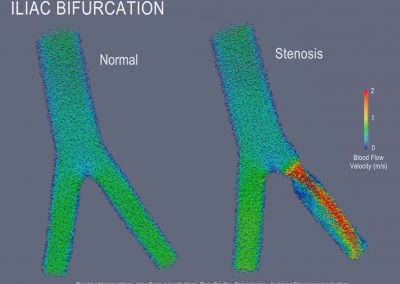

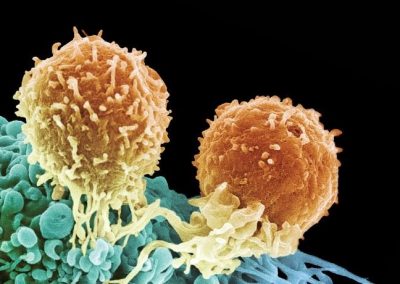

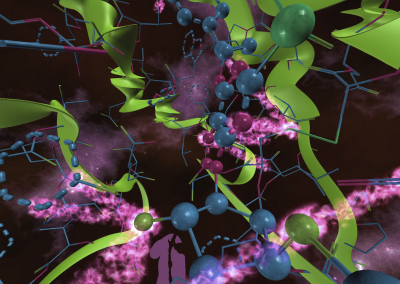

Conceptualising complex molecular tumour changes in three-dimensional space and across a fourth dimension, time, pushes the capability of the human brain. This project aims to develop an immersive extended reality (XR) tool that interfaces human problem-solving ability with genomic datasets, to transform the way we think about cancer evolution and therefore cancer treatment. The XR application will be used to help generate new hypotheses of how cancer evolves, by combining detailed genomic, pathological, spatial and temporal data from a single patient with cancer. The project is centred around a patient with a primary lung neuroendocrine tumour and 88 metastases, who requested and consented to a rapid autopsy following comprehensive ethical consultation with the patient and their extended family. Importantly, we work in an ongoing partnership with the patient’s whānau, who are wholly supportive of the project.

The process and collaboration

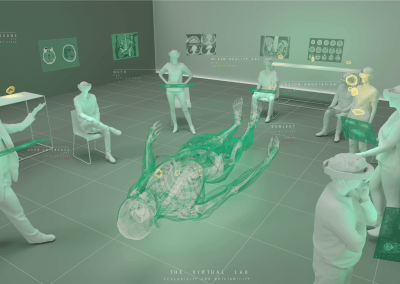

The project brings together four diverse disciplines: Architecture (spatial resolution, human interface, social interaction, visual design), Medicine (pathology, radiology, clinical context), Molecular sciences (genomics, bioinformatics and cancer biology) and eResearch (coding, data manipulation, solution architecture). This is a unique collaboration, with each discipline bringing its own perspective and set of skills to the project. The project recognises the value of collaboration in science, putting interaction at the centre of finding solutions to complex problems.

The project commenced in August 2020 and will run for two years. The project team¹ has been meeting fortnightly throughout the project to date, to work together towards designing and building the XR application and presentation space. In the first quarter of Year 1, we completed a formal qualitative review of the Phase I model. Participants were grouped into multidisciplinary pairs consisting of a cancer clinician and a scientist, to undertake evaluation of the augmented reality model under multi-user conditions. Feedback from this review contributed to the redesign of the application for Phase II.

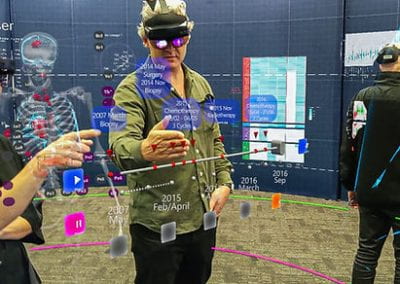

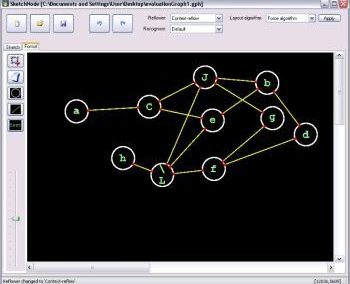

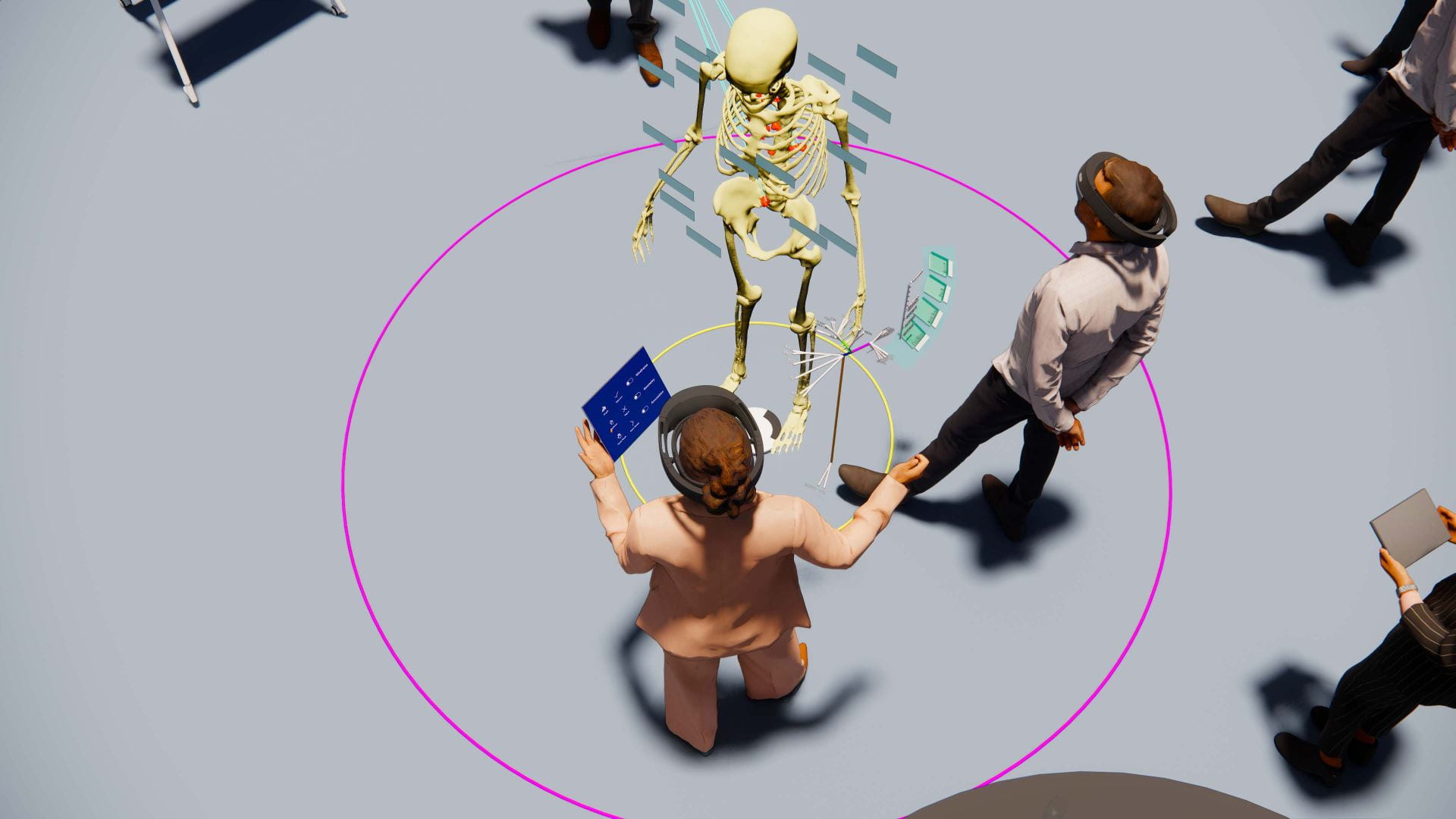

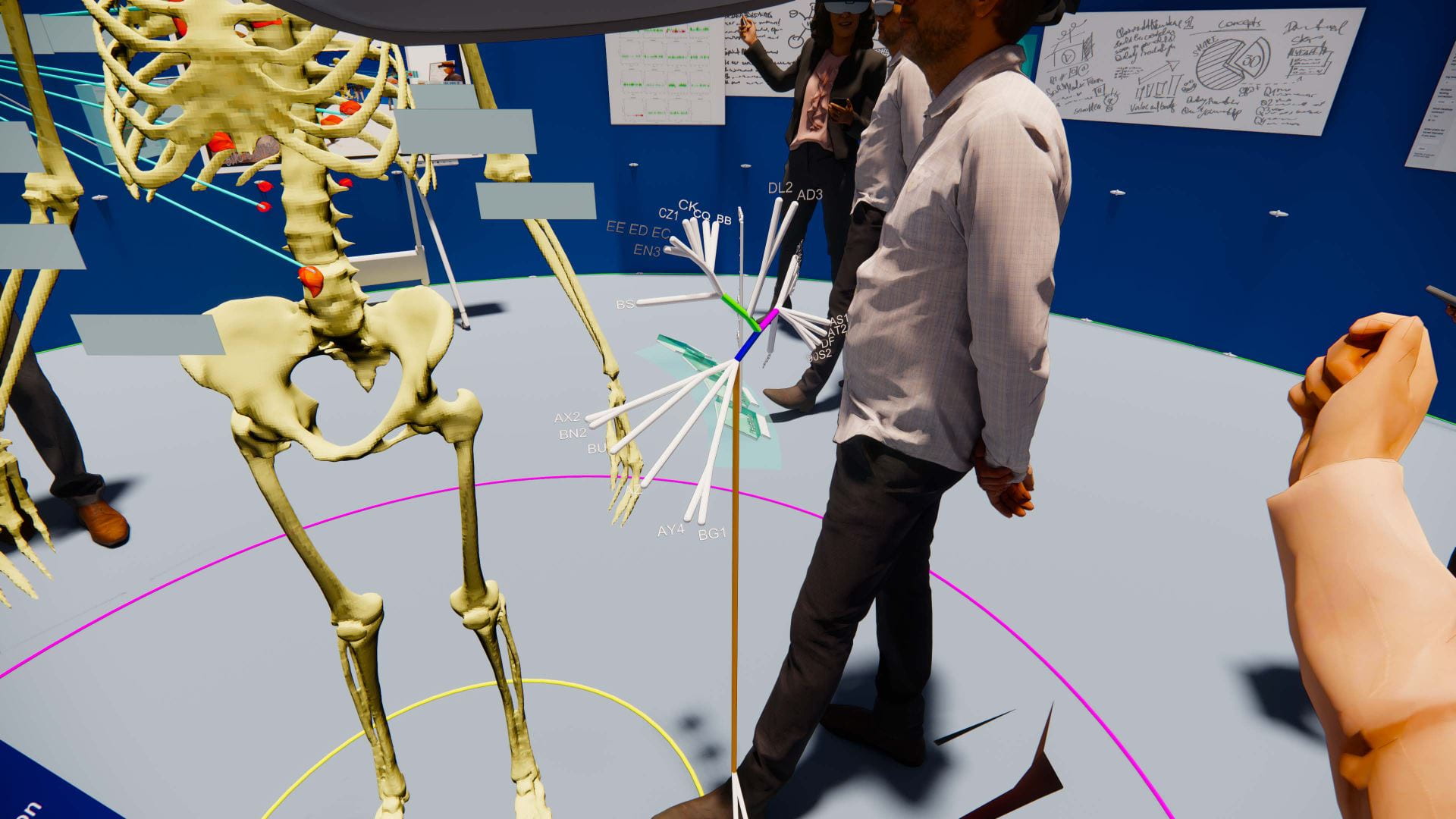

Following on from the qualitative review, Yinan Liu (Architecture and Planning arc/sec Lab), Rose McColl and Sina Masoud-Ansari (Centre for eResearch) have developed a prototype XR application for Microsoft HoloLens 2 headsets.

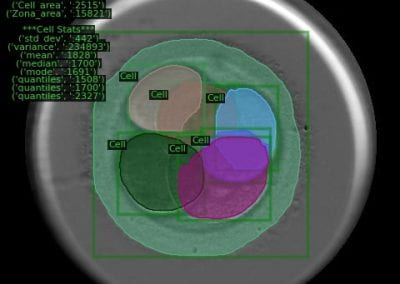

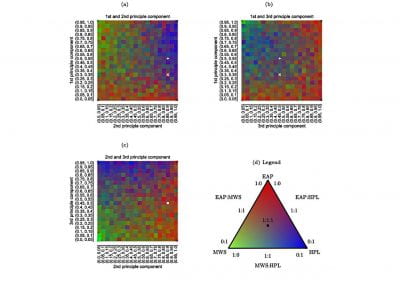

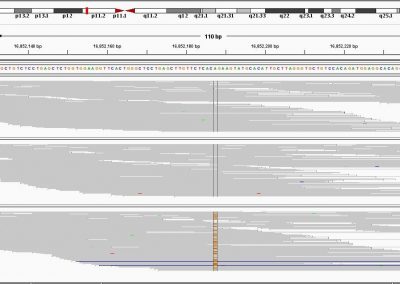

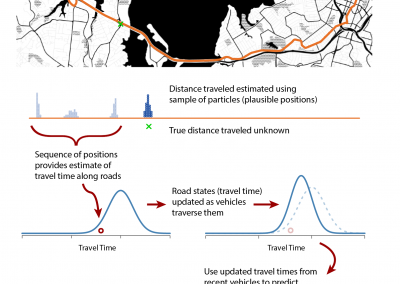

For presentation of detailed genomic data, Dr Daniel Hurley (Medical and Health Sciences/NETwork!²) has developed an RShiny web application which provides a way to view specific genomic information for each of the patient tumour samples, and provides hyperlinks to traditional online genomics resources and databases for quick reference. The web application is intended to be viewed on a tablet device at the same time that users are also immersed in the XR application. The web application is linked with the XR application so users can, for example, select a mutation they are interested in on the tablet, and it will then highlight the samples with that mutation in XR, enabling linkage between traditional 2D data and the virtual 3D space.
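The link between the tablet and the headset described above can be pictured as a lookup followed by a small message. The sketch below is illustrative only: the sample IDs, gene names and JSON message shape are hypothetical placeholders, and it is written in Python for brevity rather than in the RShiny and Unity code the project actually uses, which is not shown in this article.

```python
import json

# Hypothetical placeholder data: tumour sample ID -> set of mutated genes.
SAMPLE_MUTATIONS = {
    "sample_01": {"GENE_A", "GENE_B"},
    "sample_02": {"GENE_B"},
    "sample_03": {"GENE_A", "GENE_C"},
}


def samples_with_mutation(mutation, sample_mutations):
    """Return the sorted IDs of samples that carry the given mutation."""
    return sorted(
        sample_id
        for sample_id, mutations in sample_mutations.items()
        if mutation in mutations
    )


def build_highlight_message(mutation, sample_mutations):
    """Build a JSON message asking the XR client to highlight matching samples."""
    return json.dumps(
        {
            "action": "highlight",  # hypothetical message type
            "mutation": mutation,
            "samples": samples_with_mutation(mutation, sample_mutations),
        }
    )


# The tablet user selects a mutation; the XR client would receive this message.
message = build_highlight_message("GENE_A", SAMPLE_MUTATIONS)
print(message)
```

In a design like this, the 2D application only needs to know sample identifiers, and the XR application decides how highlighting is rendered in 3D space.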

Why HoloLens 2?

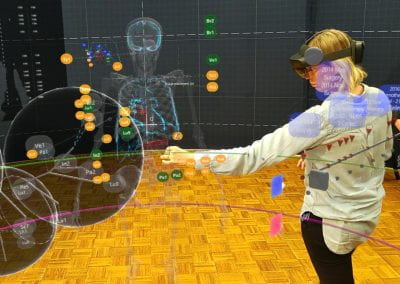

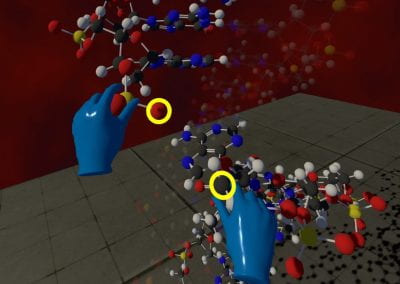

HoloLens 2 improves on the technical, visual and functional aspects of the Microsoft HoloLens 1 headsets that were used in Phase I of the project. Specific challenges that participants raised in the qualitative review of the Phase I prototype included headset pain and a narrow field of view, both of which are improved with the new HoloLens 2 hardware. The HoloLens 2 headsets are significantly more comfortable to wear than the original HoloLens. They also have an improved field of view, improved computational power and graphics, and much richer, more naturalistic gestures and interactions. Resizing, scaling, grabbing, moving and holding digital objects are done in a way that is very close to interacting with physical objects. The HoloLens 2 achieves this natural interaction by using hand tracking. It also tracks your eye movements so you can interact with objects just by looking at them, and it has voice recognition so voice commands can also be used.

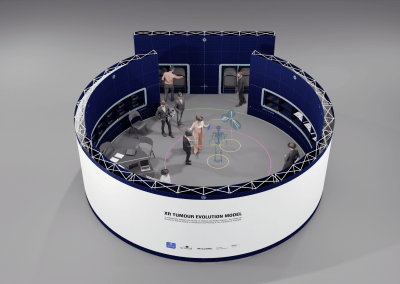

Following the success of the 2018 Phase I project, the Health Research Council of New Zealand provided funding for Phase II (HRC Explorer Grant). The primary goal for Phase II is to improve the prototype XR application using the latest platform and devices, adding richer genomic information, more naturalistic user interactions, and integration with traditional 2D devices. It also involves developing a physical presentation space in which members of the medical research community can evaluate this novel XR tool in a formal qualitative research exercise, and in which the tool can be exhibited to the wider public in an educational context. While this is a research tool in the first instance, it may form a blueprint for transformative clinical tools of the future for use by health professionals, patients and the public.

The prototype was built for HoloLens 2 headsets using the Unity 3D game engine and the Microsoft Mixed Reality Toolkit (MRTK) (see how it works on YouTube). The application uses Photon Unity Networking (PUN) to enable real-time multiplayer capabilities, and Azure Spatial Anchors for locating and aligning virtual objects in physical space, so that all users see the objects in the same position. The combination of these technologies is key to achieving the goal of creating a multi-user collaborative experience.
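The idea behind a shared anchor can be sketched with a little geometry. Each headset tracks the room in its own coordinate frame, so an object's position is stored relative to a common anchor and each device converts it into its own frame. The Python below is a conceptual sketch only and does not use the Azure Spatial Anchors or Photon APIs: the `AnchorPose` type, the function names and all numbers are hypothetical, and rotation is simplified to yaw about the vertical axis.

```python
import math
from dataclasses import dataclass


@dataclass
class AnchorPose:
    """Where one device sees the shared anchor, in that device's own frame."""
    x: float
    y: float
    z: float
    yaw: float  # rotation about the vertical (y) axis, in radians


def anchor_to_local(pose, point):
    """Convert an anchor-relative point into device-local coordinates."""
    px, py, pz = point
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    # Rotate about the vertical axis, then translate by the anchor position.
    return (
        pose.x + c * px + s * pz,
        pose.y + py,
        pose.z - s * px + c * pz,
    )


def local_to_anchor(pose, point):
    """Inverse of anchor_to_local: device-local back to anchor-relative."""
    dx, dy, dz = point[0] - pose.x, point[1] - pose.y, point[2] - pose.z
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    return (c * dx - s * dz, dy, s * dx + c * dz)


# One object position, stored relative to the shared anchor (made-up values).
object_at_anchor = (0.2, 1.1, -0.3)

# Two devices observe the same anchor at different poses in their own frames.
device_a = AnchorPose(x=1.0, y=0.0, z=2.0, yaw=0.0)
device_b = AnchorPose(x=-0.5, y=0.0, z=1.5, yaw=math.pi / 2)

local_a = anchor_to_local(device_a, object_at_anchor)
local_b = anchor_to_local(device_b, object_at_anchor)
print(local_a, local_b)
```

The local coordinates differ between the two devices, but both map back to the same anchor-relative point, which is why the users would see the object at the same physical location.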

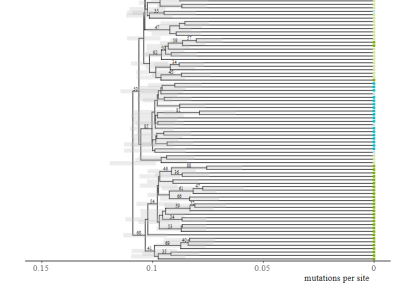

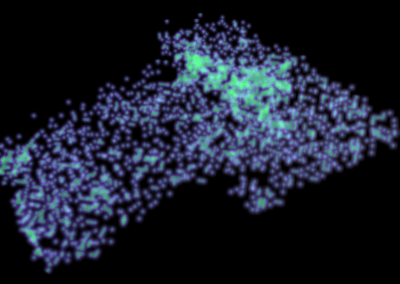

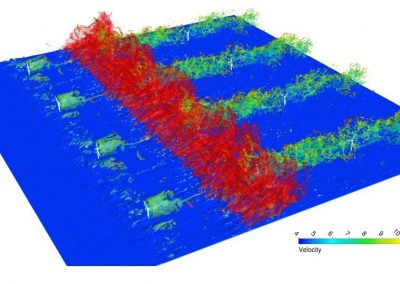

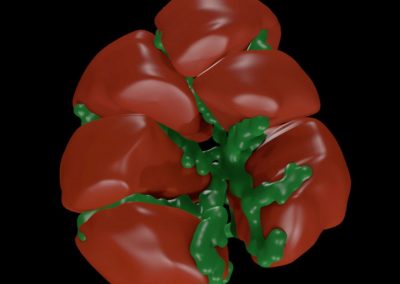

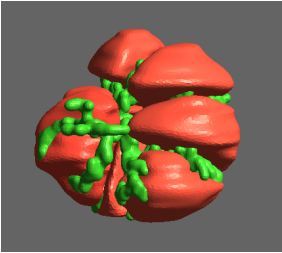

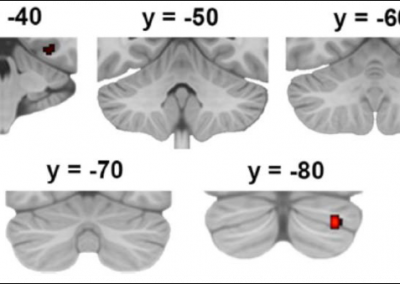

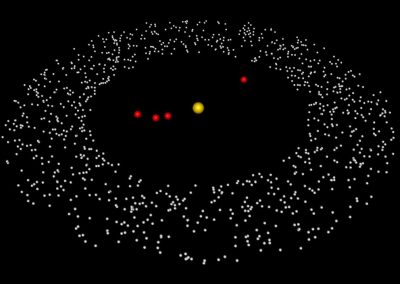

The application enables users to visualise a life-size model of the patient’s skeleton in three dimensions and the location of tumours in relation to the skeleton and other anatomical features (e.g. organs). Locations of sampling sites are indicated and a genomic evolutionary tree shows the development of mutations in the tumours over time. Change and spread of the tumours in the patient’s body is shown using a timescale which users can interact with. The models of the patient’s bone structure, tumours and organs were constructed from CT scans (read more about the method used here) which were annotated by consultant radiologist Dr Jane Reeve (Auckland City Hospital). Most of this work was done in Phase I of the project, but Dr Reeve has continued to provide her expertise and time in Phase II to add organ annotations and refine the positioning of the 3D models.
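The interactive timescale can be thought of as a filter over the tumour models: moving the slider changes which tumours are shown. The following Python sketch illustrates that idea under stated assumptions. The `Tumour` type, the tumour names and the day values are invented placeholders, not patient data, and the real application's implementation is not described in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tumour:
    name: str
    first_seen_day: int  # hypothetical: days since the earliest scan


def visible_tumours(tumours, day):
    """Return names of tumours first seen on or before the given day."""
    return [t.name for t in tumours if t.first_seen_day <= day]


# Invented placeholder values for illustration only.
TUMOURS = [
    Tumour("tumour_A", 0),
    Tumour("tumour_B", 400),
    Tumour("tumour_C", 900),
]

# Moving the timeline slider to day 500 would show the first two tumours.
print(visible_tumours(TUMOURS, 500))
```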

User experience tests

We have run several demonstrations of the prototype in the physical presentation stand. First, we gave a demonstration to the family of the patient at the centre of this project. Our application is built using the clinical information of a single patient, and the engagement and support of the patient’s family is of utmost importance. Second, we held a structured session with collaborating colleagues from both scientific and medical backgrounds to gather observations and feedback on the user experience and effectiveness of the application. The method used was Moderated User Testing with a semi-structured follow-up interview. Participants worked through a series of tasks and guided discussion points, and project team members recorded their observations. The session showed which aspects of the design were effective, such as the ability to see tumours appearing across time with overlaid tumour phylogenetic information. It also highlighted areas where the user experience could be improved, such as the placement of information and the types of gestures integrated. This feedback is useful as we look to design a cohesive application and physical space that promotes collaboration and deep investigation of cancer evolution.

Exhibitions

The XR application was exhibited at the ARS Electronica Festival Aotearoa 2021, an art, technology and society conference held from 8 to 12 September 2021 at Victoria University Wellington. Due to COVID-19, the exhibition was moved to an online format, and guests could experience the exhibits in a virtual 3D gallery and through a series of online events. Further events are planned, including a special showcase event in the first quarter of 2022, which will include international invited experts, and an international exhibition in the second half of 2022 if COVID-19 travel restrictions allow.

Reference

- The project team: Dr Ben Lawrence (Principal Investigator), Prof Cristin Print, Tamsin Robb, Braden Woodhouse, Dr Daniel Hurley, Faculty of Medical and Health Sciences; Dr Michael Davis, Assoc Prof Uwe Rieger, Yinan Liu, Denice Belsten, School of Architecture and Planning; Dr Jane Reeve, Auckland City Hospital; Rose McColl, Sina Masoud-Ansari, Centre for eResearch.

- NETwork! is a New Zealand-wide alliance of cancer clinicians and scientists working together in a national framework to manage and study neuroendocrine cancers. See https://www.network.ac.nz.

See more case study projects

Our Voices: using innovative techniques to collect, analyse and amplify the lived experiences of young people in Aotearoa

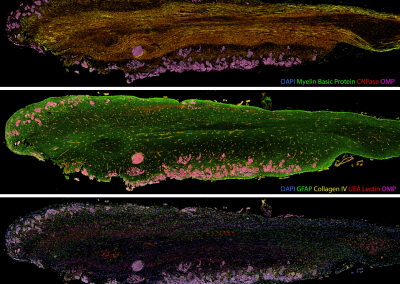

Painting the brain: multiplexed tissue labelling of human brain tissue to facilitate discoveries in neuroanatomy

Detecting anomalous matches in professional sports: a novel approach using advanced anomaly detection techniques

Benefits of linking routine medical records to the GUiNZ longitudinal birth cohort: Childhood injury predictors

Using a virtual machine-based machine learning algorithm to obtain comprehensive behavioural information in an in vivo Alzheimer’s disease model

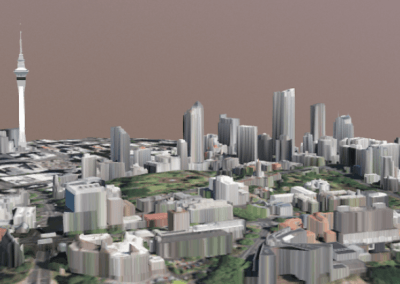

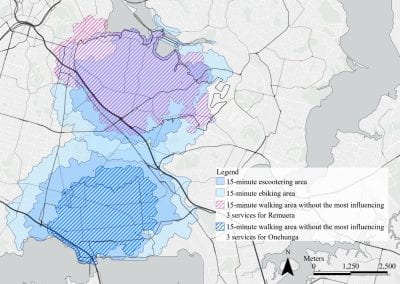

Mapping livability: the “15-minute city” concept for car-dependent districts in Auckland, New Zealand

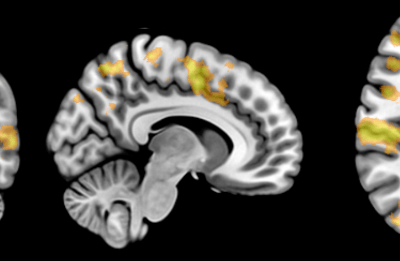

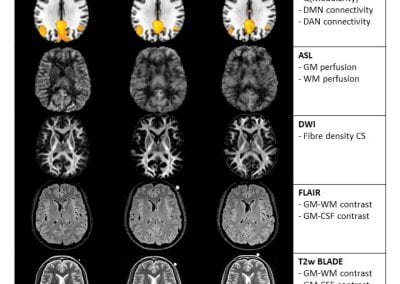

Travelling Heads – Measuring Reproducibility and Repeatability of Magnetic Resonance Imaging in Dementia

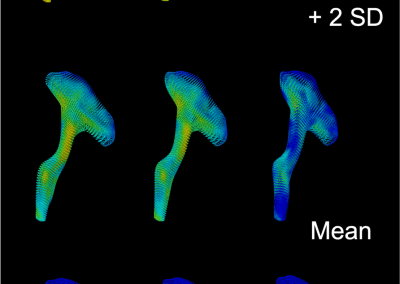

Novel Subject-Specific Method of Visualising Group Differences from Multiple DTI Metrics without Averaging

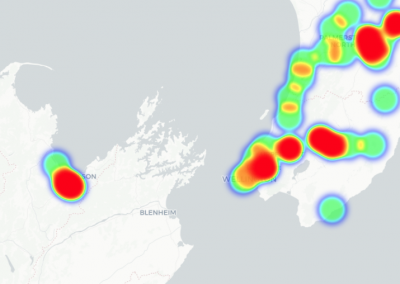

Re-assess urban spaces under COVID-19 impact: sensing Auckland social ‘hotspots’ with mobile location data

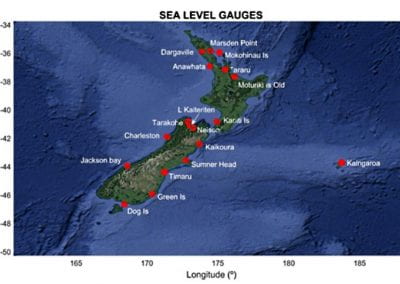

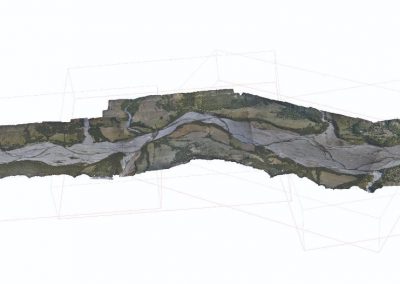

Aotearoa New Zealand’s changing coastline – Resilience to Nature’s Challenges (National Science Challenge)

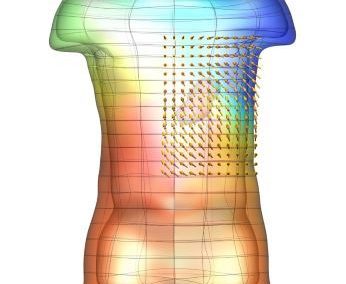

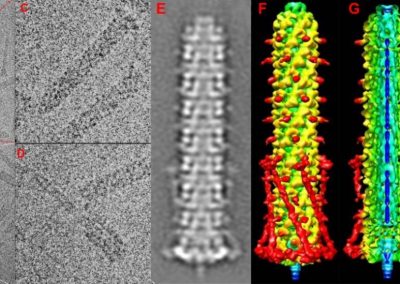

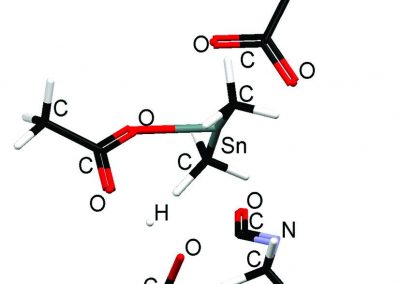

Proteins under a computational microscope: designing in-silico strategies to understand and develop molecular functionalities in Life Sciences and Engineering

Coastal image classification and nalysis based on convolutional neural betworks and pattern recognition

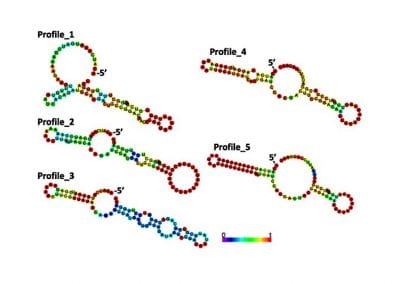

Determinants of translation efficiency in the evolutionarily-divergent protist Trichomonas vaginalis

Measuring impact of entrepreneurship activities on students’ mindset, capabilities and entrepreneurial intentions

Using Zebra Finch data and deep learning classification to identify individual bird calls from audio recordings

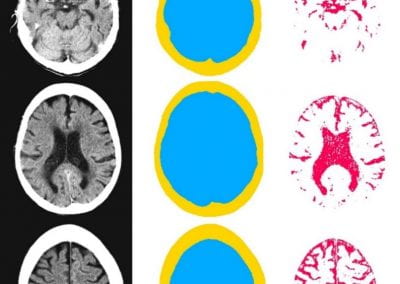

Automated measurement of intracranial cerebrospinal fluid volume and outcome after endovascular thrombectomy for ischemic stroke

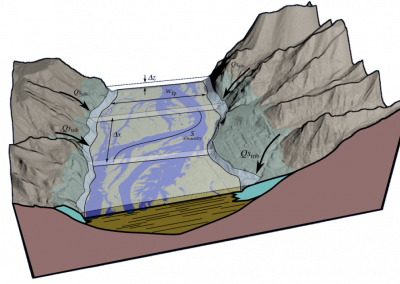

Using simple models to explore complex dynamics: A case study of macomona liliana (wedge-shell) and nutrient variations

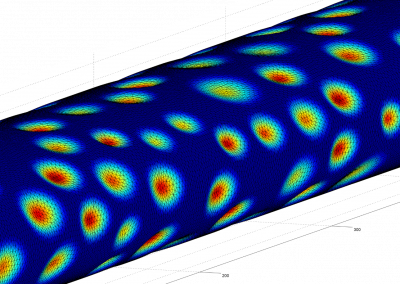

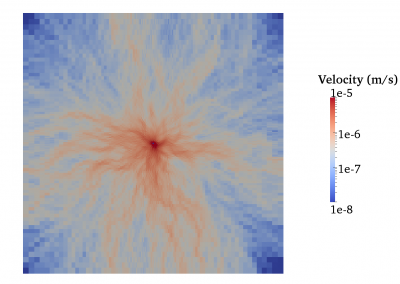

Fully coupled thermo-hydro-mechanical modelling of permeability enhancement by the finite element method

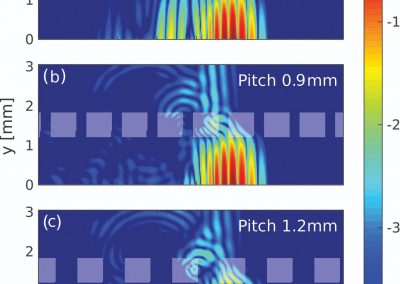

Modelling dual reflux pressure swing adsorption (DR-PSA) units for gas separation in natural gas processing

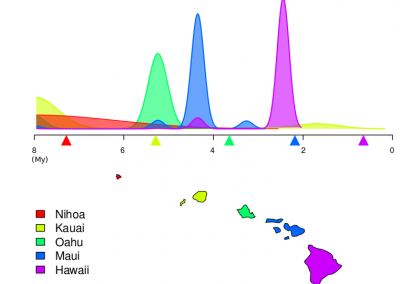

Molecular phylogenetics uses genetic data to reconstruct the evolutionary history of individuals, populations or species

Wandering around the molecular landscape: embracing virtual reality as a research showcasing outreach and teaching tool