An investigation into the Leap Motion device for “gesture-as-sign”

Lucy Boermans, Elam School of Fine Arts; Andrew Leathwick, Centre for eResearch

Introduction: relational shifts in society

The rise of social media and digital communication has forever changed the everyday reality humans live in. We no longer live in a purely physical world; instead, ‘multi-dimensional being’ has become a daily reality for all of us.

Artist Lucinda Boermans’ work examines and questions the sociological shift in primary communication from the physical (face-to-face) to the virtual (text-messaging), with the aim of reaching a clearer understanding of this shift, and in turn, its impact upon relational being.

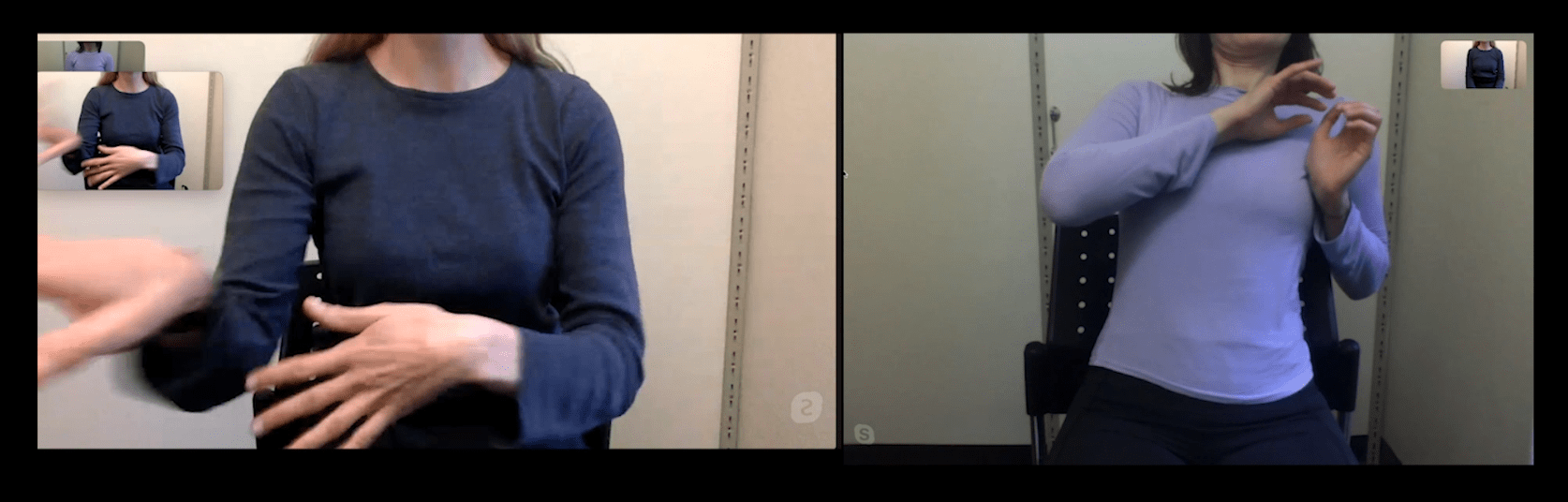

At the end of 2019, Boermans recorded and observed ‘on-line communication coupling’ via WhatsApp/Skype, in which participants took part in ‘sign-as-gesture’ conversations: communication through gesture alone.

Aim

The aim was to investigate sequences of gestures (responsive actions evolving over time). Gestures repeat an action of progression in space and time, and form an empathic relation through movement in space.

Boermans turned to the possibility of using deep learning techniques and gesture recognition to facilitate gesture exchanges between participants, or even between participants and a computer. In collaboration with the Centre for eResearch (CeR), a deep learning system was developed that successfully recognizes over 30 gestures. Developing a system that can respond to human input with computer-generated gestures remains future work.

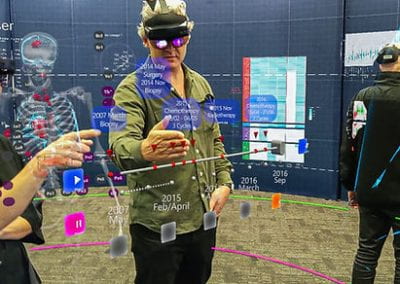

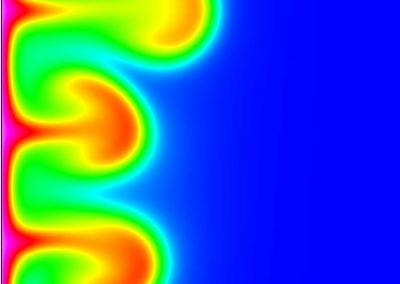

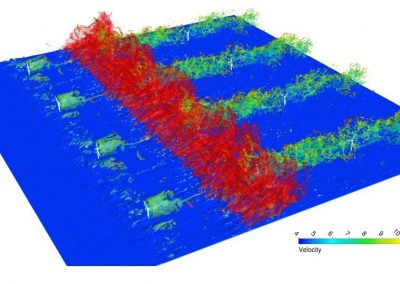

Figure 1 – One of the original ‘gesture conversations’ between two participants. “A language of gesture develops from the desire to communicate. The language is activated via digital interaction – it is in motion, not static, it is unique to that activated moment. The nature of the gesture is dependent on the interactant; there are no concrete rules. Gestures communicated do not have to be learned or remembered, they evolve; they may change upon each interaction in time. They are embodied responses to each digital interaction.” – participant information sheet.

Training data – curation and feature selection

Deep learning is all about data – without data, no models can be trained. Deep learning uses a set of example input/output pairs to learn the relationship between input and output, and thereby learns to predict the correct output for a new input. In this case, the dataset consists of Leap Motion capture data for various gestures, together with labels indicating which gesture was being performed.
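The shape of such a dataset can be sketched in Python: each labelled recording is cut into fixed-length windows, and every window becomes one input/output pair. The window length, stride and the flattened per-frame format are assumptions for illustration, not details taken from the project.

```python
from typing import List, Sequence, Tuple

# Assumed format: one frame of hand-tracking values, already flattened to floats.
Frame = List[float]

def make_windows(
    frames: Sequence[Frame],
    label: str,
    window: int,
    stride: int,
) -> List[Tuple[List[Frame], str]]:
    """Cut one labelled recording into fixed-length (input, output) pairs.

    Every window inherits the recording's gesture label, so a single
    recording of, say, 'wave' yields several training examples.
    """
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    pairs = []
    # Slide a window of `window` frames along the recording, `stride` frames at a time.
    for start in range(0, len(frames) - window + 1, stride):
        pairs.append((list(frames[start:start + window]), label))
    return pairs
```

Overlapping windows (stride smaller than window) are one common way to get more examples out of a limited number of in-house recordings.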

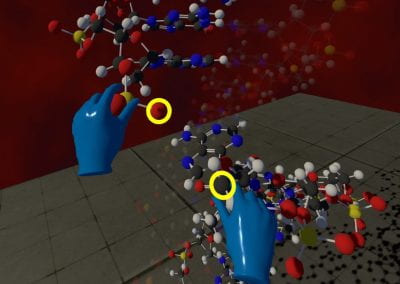

The Leap Motion capture device outputs raw data about the locations of different parts of the hands. This information is incredibly detailed, containing positional information in three axes (down/up, forwards/backwards, left/right) for many parts of the hands, such as the position of each fingertip, the position of each bone in each finger, the centre of each palm, and the direction each hand is pointing in. In fact, this information is too detailed: using it in raw form as training data risks confusing the models with too much low-level information.

Live prediction & ‘affects’

One of the main goals was to achieve live prediction – that is, someone performs a gesture over a Leap Motion sensor, and the gesture is recognized in real time. This was achieved by continuously feeding the last second or so of live data into the model and updating a visual interface accordingly.
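A minimal sketch of this sliding-window approach, assuming a fixed frame rate: a bounded buffer always holds roughly the last second of frames, and the model is re-run on it every few frames. The frame rate (`fps`), the prediction interval (`predict_every`) and the `model` callable are hypothetical stand-ins; the project's actual buffer size and rates are not stated.

```python
from collections import deque
from typing import Callable, Deque, List, Optional, Sequence

class LivePredictor:
    """Keep roughly the last second of frames and re-run a model on them.

    `fps`, `predict_every` and `model` are illustrative assumptions.
    """

    def __init__(self, model: Callable[[List[Sequence[float]]], str],
                 fps: int = 60, predict_every: int = 6) -> None:
        self.model = model
        # deque(maxlen=...) drops the oldest frame automatically: ~1 s of data.
        self.buffer: Deque[Sequence[float]] = deque(maxlen=fps)
        self.predict_every = predict_every
        self._since_last = 0
        self.latest: Optional[str] = None

    def push(self, frame: Sequence[float]) -> Optional[str]:
        """Add one live frame; return a fresh prediction when one is due."""
        self.buffer.append(frame)
        self._since_last += 1
        # Only predict once the buffer is full, and then every `predict_every` frames.
        if len(self.buffer) == self.buffer.maxlen and self._since_last >= self.predict_every:
            self._since_last = 0
            self.latest = self.model(list(self.buffer))
            return self.latest
        return None
```

Predicting only every few frames, rather than on every frame, keeps the model's workload bounded on slower machines while still tracking the gesture closely.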

In addition to predicting which gesture was being performed, Lucy was also interested in exploring the ‘affective dimension’ of gestures – the same gesture may be performed smoothly, roughly, angrily, happily, and so on. This was implemented with two metrics:

- Movement: How fast are the hands and fingers moving?

- Angularity: How jerky are gestures?

These metrics, which measure the rate of change of position and the rate of change of speed respectively, were chosen because they are independent of context. More emotive or abstract affective dimensions, such as humour or anger, are highly context dependent and therefore more difficult to predict.
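The two metrics can be sketched for a single tracked point (for example, one fingertip) sampled at a fixed interval `dt`: movement as mean speed, and angularity as the mean absolute change in speed. The exact formulas, averaging and units used in the project are not given, so this is an assumed, simplified version; a fuller measure of jerkiness might also account for changes in direction.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # assumed (x, y, z) position of one tracked point

def speeds(track: Sequence[Point], dt: float) -> List[float]:
    """Speed between consecutive frames: |change in position| / dt."""
    return [math.dist(a, b) / dt for a, b in zip(track, track[1:])]

def movement(track: Sequence[Point], dt: float) -> float:
    """Mean speed over the window (rate of change of position)."""
    s = speeds(track, dt)
    return sum(s) / len(s) if s else 0.0

def angularity(track: Sequence[Point], dt: float) -> float:
    """Mean absolute change in speed per second (rate of change of speed).

    Smooth, steady motion scores near zero; sudden starts and stops score high.
    """
    s = speeds(track, dt)
    ds = [abs(b - a) / dt for a, b in zip(s, s[1:])]
    return sum(ds) / len(ds) if ds else 0.0
```

A hand moving steadily and a hand moving in bursts can have the same movement score while differing sharply in angularity, which is why the two metrics complement each other.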

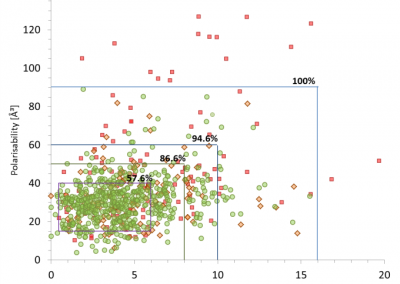

The data was filtered down to the variables that were informative for gesture recognition. This involved training models on different sets of variables to see which ones enabled good performance. It also proved helpful to calculate new abstract features by hand, such as the distance of each fingertip from the plane of the palm, or from the centre of the palm. Computing these features before training frees a model from having to learn these abstractions on its own from the training data.
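The two example features can be sketched with basic vector geometry: the signed distance from a fingertip to the plane through the palm centre, and the straight-line distance from the fingertip to the palm centre. The inputs are assumed to be plain (x, y, z) tuples for the fingertips, the palm centre and a palm normal vector; the function names are hypothetical, not part of the Leap Motion API.

```python
import math
from typing import List, Sequence, Tuple

Vec = Tuple[float, float, float]  # assumed (x, y, z) coordinates

def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def distance_to_palm_plane(tip: Vec, palm_centre: Vec, palm_normal: Vec) -> float:
    """Signed distance from a fingertip to the plane of the palm.

    The plane passes through `palm_centre` with normal `palm_normal`;
    the normal is normalised here, so any non-zero length works.
    """
    length = math.sqrt(dot(palm_normal, palm_normal))
    if length == 0:
        raise ValueError("palm normal must be non-zero")
    return dot(sub(tip, palm_centre), palm_normal) / length

def hand_features(tips: Sequence[Vec], palm_centre: Vec, palm_normal: Vec) -> List[float]:
    """Two engineered features per fingertip: distance to palm plane, then to palm centre."""
    feats: List[float] = []
    for tip in tips:
        feats.append(distance_to_palm_plane(tip, palm_centre, palm_normal))
        feats.append(math.dist(tip, palm_centre))
    return feats
```

Features like these are invariant to where the hand is in the sensor's field of view, so a curled finger looks the same to the model whether the hand is near or far, left or right.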

There is no freely available online dataset of Leap Motion capture data with associated gesture labels, so all data was recorded in-house at CeR by various volunteers. Recruiting a diverse group of people to record training data is important – otherwise, the model may only work with the hands it has been trained on!
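One way to check whether a model only works with familiar hands is to hold out whole volunteers when testing, so the test set contains no one seen during training. The sketch below assumes each sample is tagged with a participant ID; this tagging scheme and the function name are hypothetical, and the source does not say how the project evaluated its models.

```python
import random
from typing import List, Sequence, Tuple

Sample = Tuple[str, object]  # assumed (participant_id, example) pair

def split_by_participant(
    samples: Sequence[Sample],
    test_fraction: float = 0.2,
    seed: int = 0,
) -> Tuple[List[Sample], List[Sample]]:
    """Hold out whole participants so test hands are never seen in training."""
    people = sorted({pid for pid, _ in samples})
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    rng.shuffle(people)
    n_test = max(1, round(len(people) * test_fraction))
    test_people = set(people[:n_test])
    train = [s for s in samples if s[0] not in test_people]
    test = [s for s in samples if s[0] in test_people]
    return train, test
```

A random split by sample would let frames from the same person appear on both sides, which tends to overstate how well the model generalises to new hands.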

Performance

More than 95% accuracy was achieved across a library of over thirty gestures. Gestures included common ones like waving, thumbs up, and counting, and more abstract gestures such as ‘smoothing sand’, ‘love heart’, and ‘robotic arm’.
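Overall accuracy is the fraction of predictions that match the recorded label; with over thirty gestures it can also help to break this down per gesture, since a high overall figure can hide one or two gestures the model often confuses. The sketch below is a generic illustration with hypothetical function names and implies no per-gesture results from the project.

```python
from collections import Counter
from typing import Dict, Sequence

def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of predictions that match the recorded gesture label."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two equal-length, non-empty label lists")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def per_gesture_accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, float]:
    """Accuracy within each gesture class, to spot gestures the model confuses."""
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {g: hits[g] / n for g, n in totals.items()}
```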

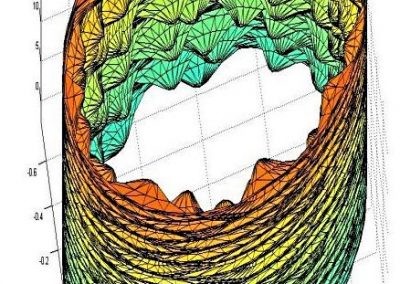

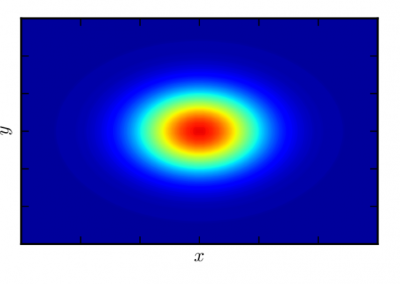

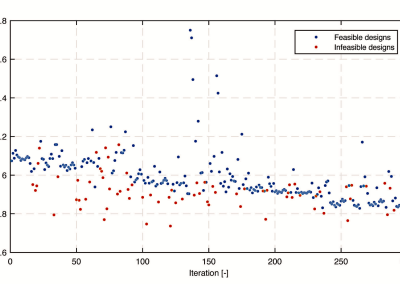

Live plotting

A live plotting tool was developed to plot gesture predictions, confidence levels of predictions, and movement and angularity. The smoothness of this plotting proved highly dependent on the processing power of the machine being used, so a simple interface was developed with controls to change the frequency of prediction and the frame rate of graph updates, along with some control over how the plotted metrics were calculated.
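Separate controls for prediction frequency and graph frame rate amount to two independent rate limits on the same loop. The class below is a hypothetical illustration of that idea, not the project's interface code: each limiter decides, from a timestamp in seconds, whether its action is due.

```python
class RateLimiter:
    """Allow an action at most `hz` times per second, given timestamps in seconds."""

    def __init__(self, hz: float) -> None:
        if hz <= 0:
            raise ValueError("hz must be positive")
        self.interval = 1.0 / hz
        self._next = float("-inf")  # first call is always allowed

    def ready(self, now: float) -> bool:
        """Return True (and schedule the next slot) if the action is due at `now`."""
        if now >= self._next:
            self._next = now + self.interval
            return True
        return False

    def set_rate(self, hz: float) -> None:
        """Change the rate, e.g. from a slider; takes effect after the next scheduled slot."""
        if hz <= 0:
            raise ValueError("hz must be positive")
        self.interval = 1.0 / hz
```

In a live loop, one limiter (say `predict = RateLimiter(10)`) would gate calls to the model and another (`redraw = RateLimiter(30)`) would gate graph updates, each checked with `ready(time.monotonic())`. A slower machine can then lower either rate without affecting the other.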

Next steps

The project as it currently stands is a proof of concept, showing that gesture recognition with the Leap Motion device is feasible. Future work will aim to develop this concept further, as part of an arts installation, to facilitate gesture conversations with a computer or between participants who are unable to see one another.

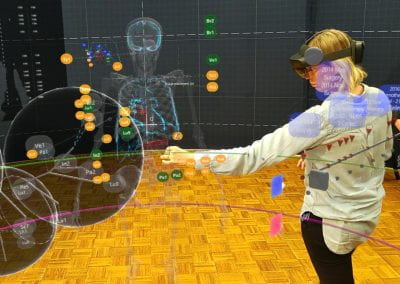

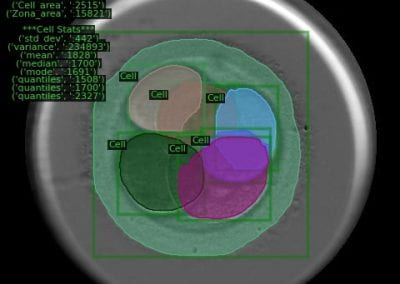

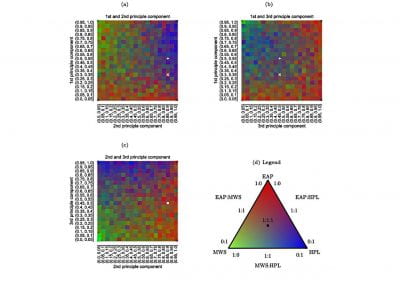

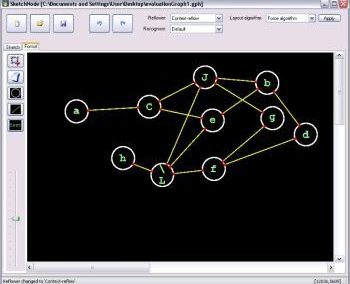

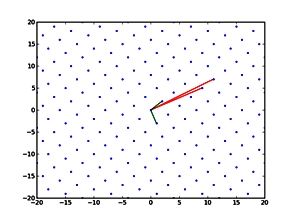

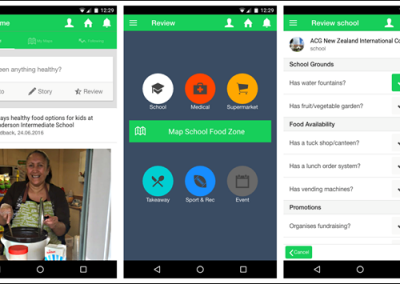

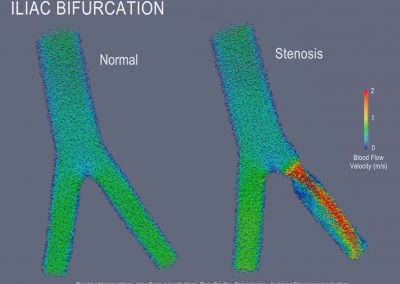

Figure 2 – The live prediction interface. Top left: control interface with an icon and text showing the current prediction, with the text coloured red according to levels of movement and angularity. Bottom left: the interface provided by Leap Motion for viewing the positions of detected body parts. Right: live plotting, with the green line showing prediction confidence, the orange line showing angularity, and the blue line showing movement.

See more case study projects

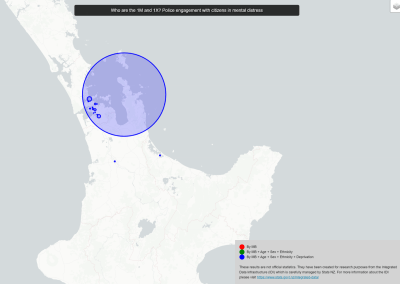

Our Voices: using innovative techniques to collect, analyse and amplify the lived experiences of young people in Aotearoa

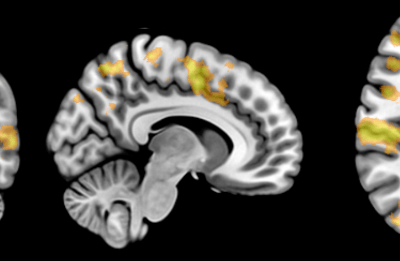

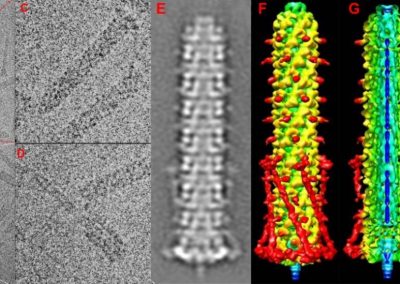

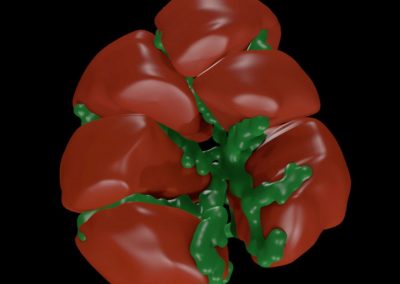

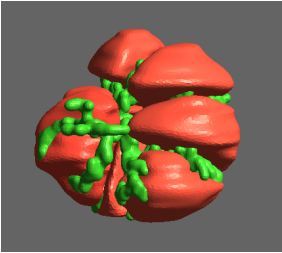

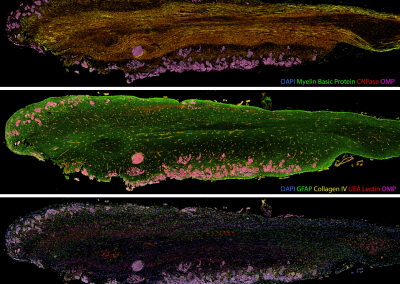

Painting the brain: multiplexed tissue labelling of human brain tissue to facilitate discoveries in neuroanatomy

Detecting anomalous matches in professional sports: a novel approach using advanced anomaly detection techniques

Benefits of linking routine medical records to the GUiNZ longitudinal birth cohort: Childhood injury predictors

Using a virtual machine-based machine learning algorithm to obtain comprehensive behavioural information in an in vivo Alzheimer’s disease model

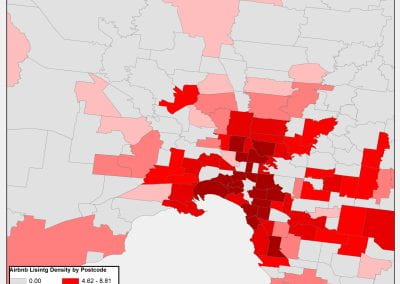

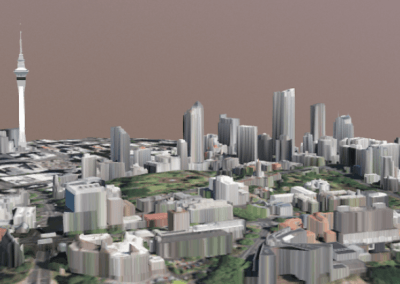

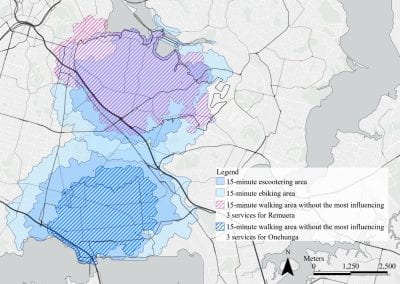

Mapping livability: the “15-minute city” concept for car-dependent districts in Auckland, New Zealand

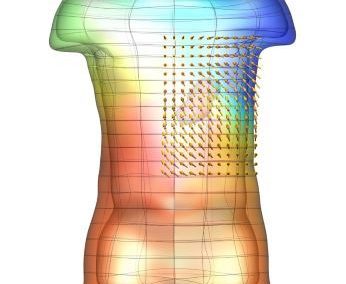

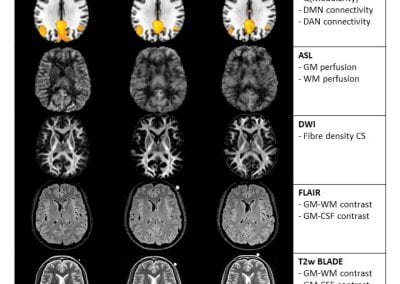

Travelling Heads – Measuring Reproducibility and Repeatability of Magnetic Resonance Imaging in Dementia

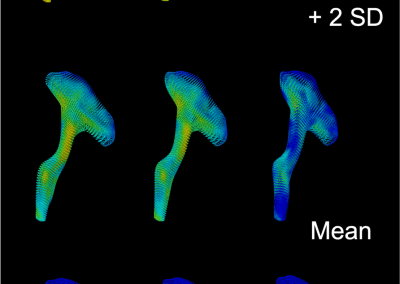

Novel Subject-Specific Method of Visualising Group Differences from Multiple DTI Metrics without Averaging

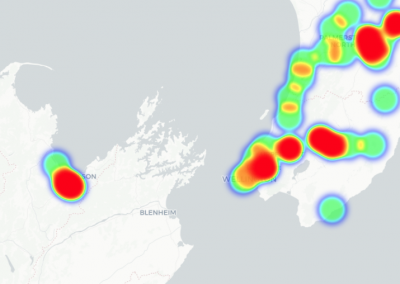

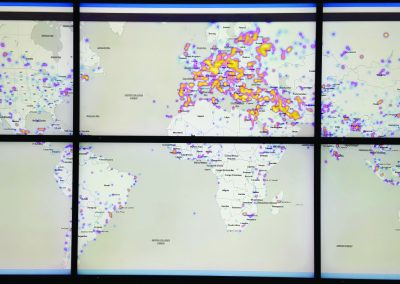

Re-assess urban spaces under COVID-19 impact: sensing Auckland social ‘hotspots’ with mobile location data

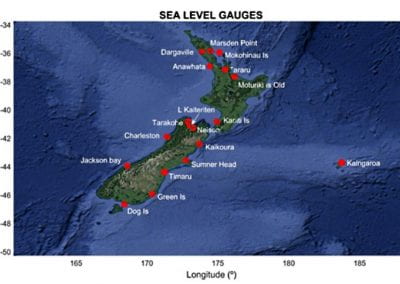

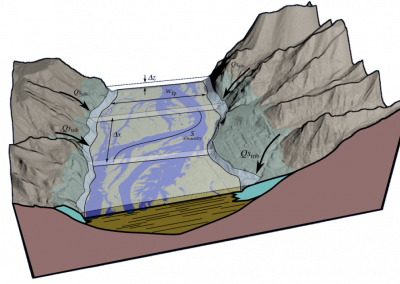

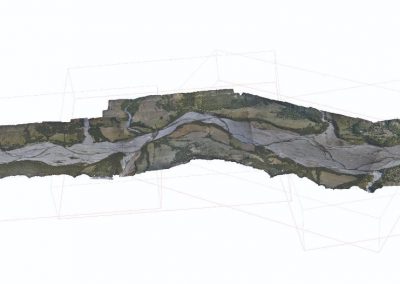

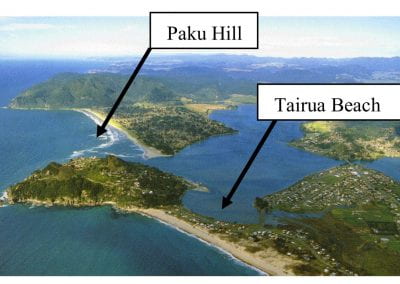

Aotearoa New Zealand’s changing coastline – Resilience to Nature’s Challenges (National Science Challenge)

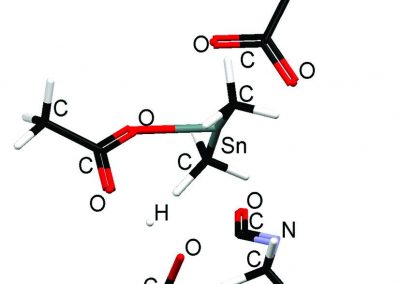

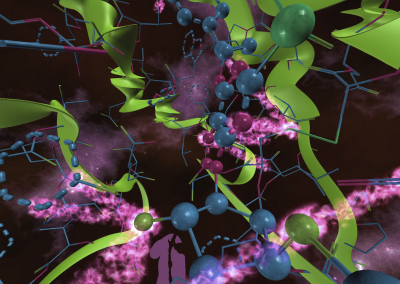

Proteins under a computational microscope: designing in-silico strategies to understand and develop molecular functionalities in Life Sciences and Engineering

Coastal image classification and nalysis based on convolutional neural betworks and pattern recognition

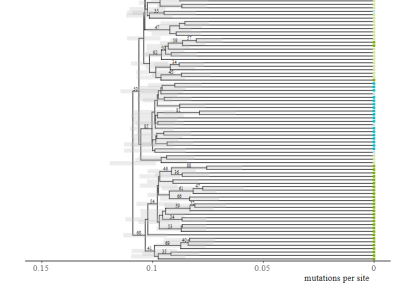

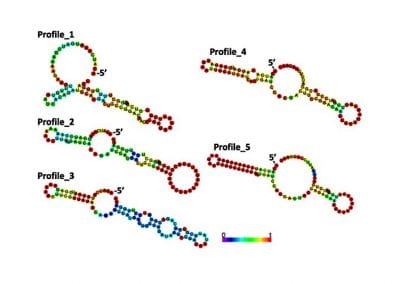

Determinants of translation efficiency in the evolutionarily-divergent protist Trichomonas vaginalis

Measuring impact of entrepreneurship activities on students’ mindset, capabilities and entrepreneurial intentions

Using Zebra Finch data and deep learning classification to identify individual bird calls from audio recordings

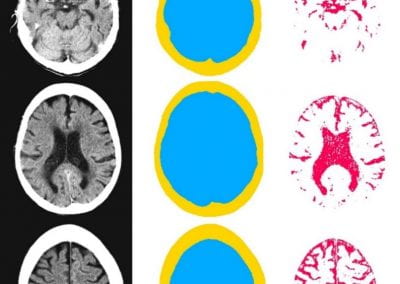

Automated measurement of intracranial cerebrospinal fluid volume and outcome after endovascular thrombectomy for ischemic stroke

Using simple models to explore complex dynamics: A case study of macomona liliana (wedge-shell) and nutrient variations

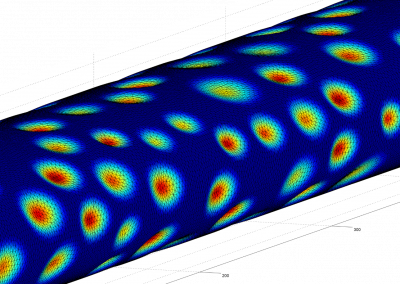

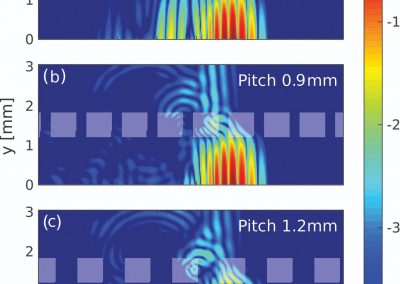

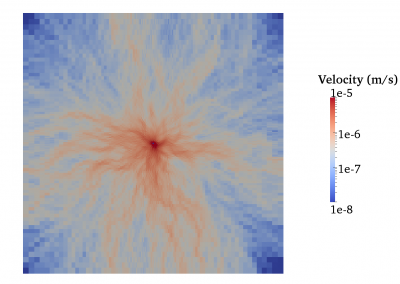

Fully coupled thermo-hydro-mechanical modelling of permeability enhancement by the finite element method

Modelling dual reflux pressure swing adsorption (DR-PSA) units for gas separation in natural gas processing

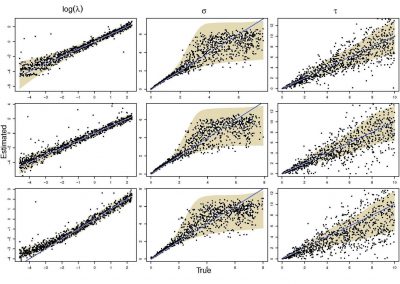

Molecular phylogenetics uses genetic data to reconstruct the evolutionary history of individuals, populations or species

Wandering around the molecular landscape: embracing virtual reality as a research showcasing outreach and teaching tool