Extended reality is turning cancer research into a team sport

Tamsin J Robb1, Yinan Liu2, Braden Woodhouse3, Charlotta Windahl4, Daniel Hurley1, Grant McArthur5,6,7, Stephen B Fox5,7, Lisa Brown5,7,8, Parry Guilford9, Alice Minhinnick3,10, Christopher Jackson9, Cherie Blenkiron1,11, Kate Parker3, Rose McColl12, Nick Young12, Veronica Boyle3, Laird Cameron10, Sanjeev Deva10, Jane Reeve13, Cristin G Print1, Michael Davis2, Uwe Rieger2, Ben Lawrence3,10

1 Molecular Medicine and Pathology, Faculty of Medical and Health Sciences, University of Auckland, New Zealand

2 School of Architecture and Planning, University of Auckland, Auckland, New Zealand

3 Discipline of Oncology, Faculty of Medical and Health Sciences, University of Auckland, New Zealand

4 Business School, University of Auckland, New Zealand

5 University of Melbourne, Melbourne, VIC, Australia

6 Victorian Comprehensive Cancer Centre Alliance, Melbourne, VIC, Australia

7 Peter MacCallum Cancer Centre, Melbourne, VIC, Australia

8 The Royal Melbourne Hospital, Melbourne, VIC, Australia

9 University of Otago, Dunedin, New Zealand

10 Auckland City Hospital, Auckland, New Zealand

11 Auckland Cancer Society Research Centre, University of Auckland, New Zealand

12 Centre for eResearch, University of Auckland, New Zealand

13 Radiology Auckland, Te Toka Tumai, Auckland, New Zealand

The three-dimensional representation of patient data across time and space

From patient communication to the vision of a digital tumour board: the XR Tumour Evolution Project (XRTEP) is a unique, real-world application of design in cancer research. It is enabled by a rare inter-disciplinary collaboration between architects, cancer clinicians, scientists, and technologists. How did the project emerge? And what can it teach us about the future of cancer research?

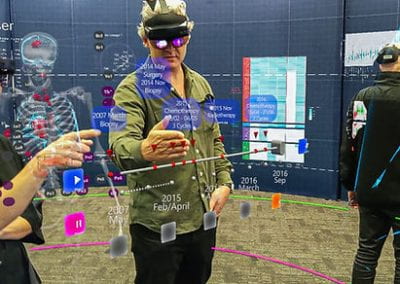

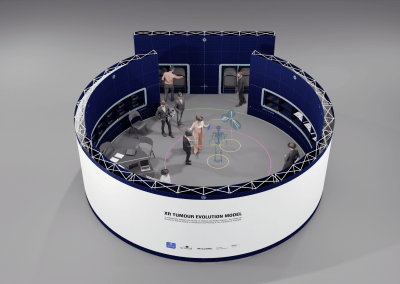

In an arena walled by white panels, people gather. They move around, brush their hands through the air and point at things invisible to those outside. Most of them are wearing extended reality headsets; some are looking at tablets. All of them took the chance to experience a new dimension of oncology research at this year’s Ars Electronica in Linz: the XR Tumour Evolution Project (XRTEP) from Auckland, New Zealand, was part of the annual exhibition and opened its doors to anyone interested.

We talked to four researchers involved in the project: Dr. Ben Lawrence, medical oncologist and cancer genomics researcher; Dr. Tamsin Robb, cancer genomics researcher; Uwe Rieger, lead of the XR design technical team; and Yinan Liu, XR design. Present in spirit was the patient whose donation made the XRTEP possible.

How did the XR Tumour Evolution Project come into existence?

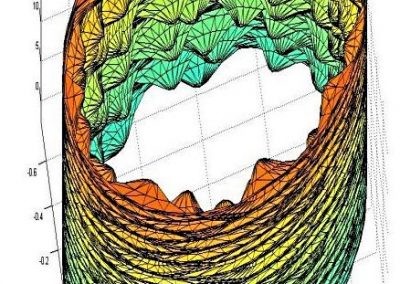

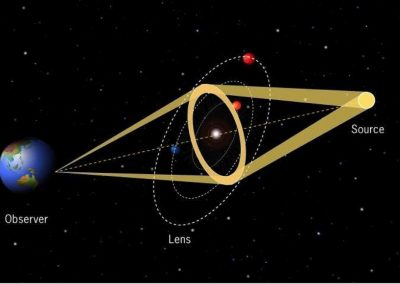

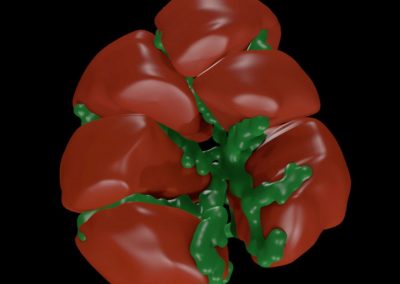

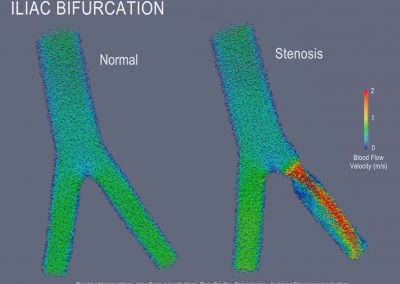

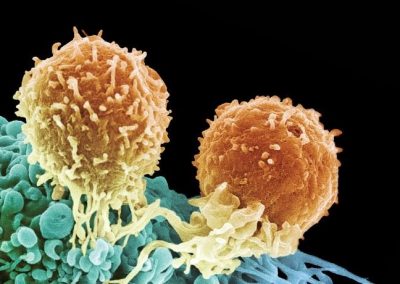

Ben Lawrence: I’ll start with the clinical point of view. As cancer moves around the body, it changes. Those changes affect the way cancer responds to treatment. Some treatments work well in one part of the cancer but not in another. We now know that this is caused by changes in the genomic profile of the cancer at different locations.

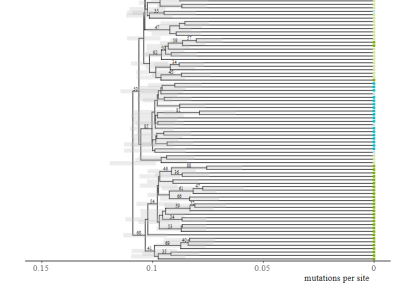

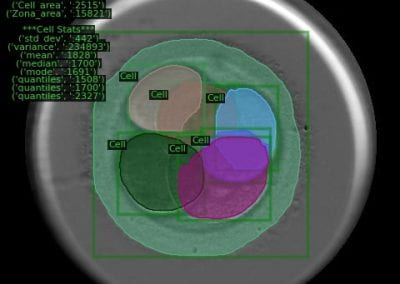

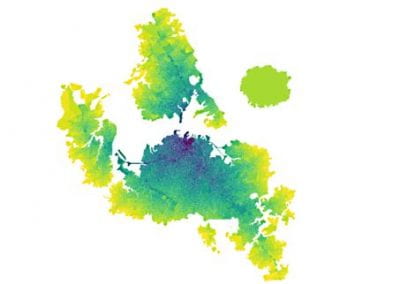

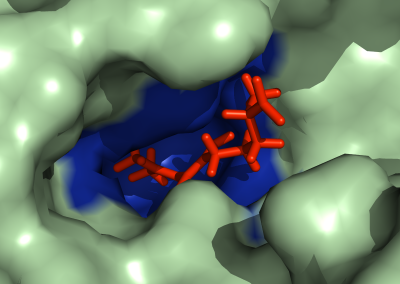

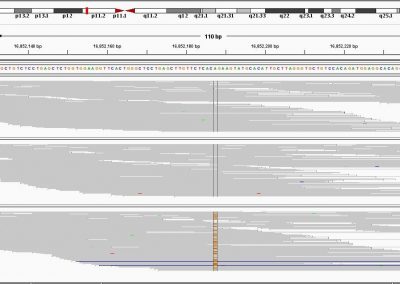

In our project, we had an opportunity to sequence multiple different metastases from within a single person with cancer and define that variation to understand how the cancer developed. When we got this initial data, it was lots of gene sequence information that was too complicated for us to understand. We needed a way to visualise this data so that we could get our heads around it.

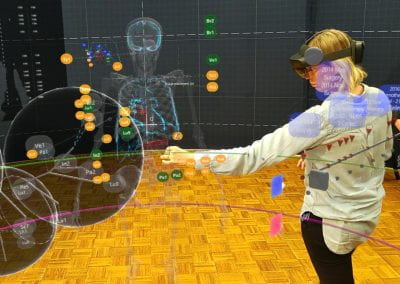

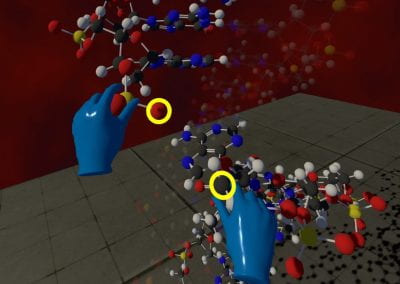

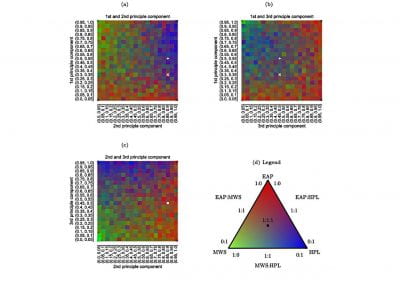

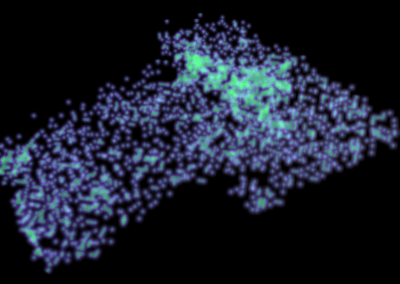

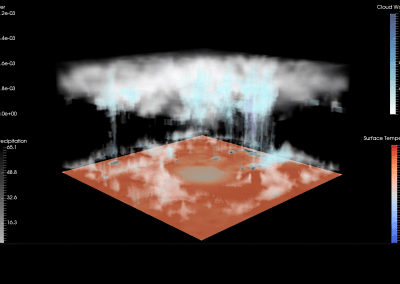

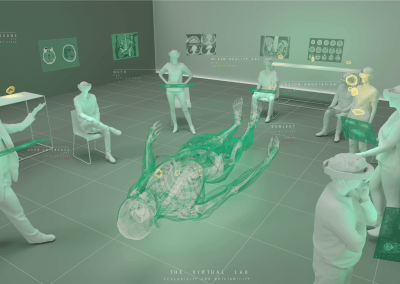

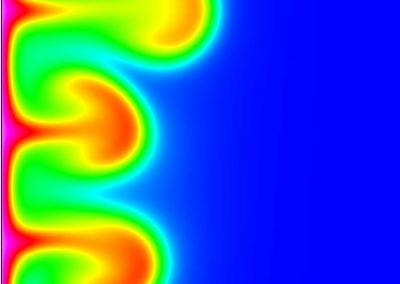

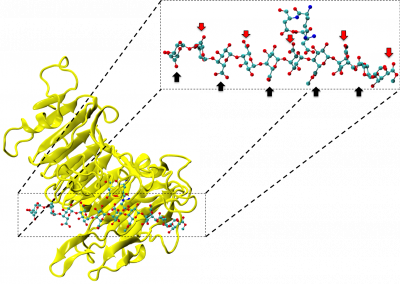

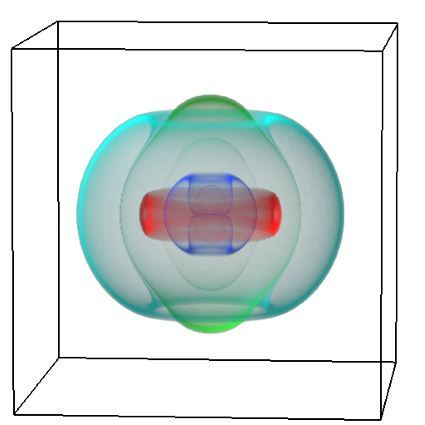

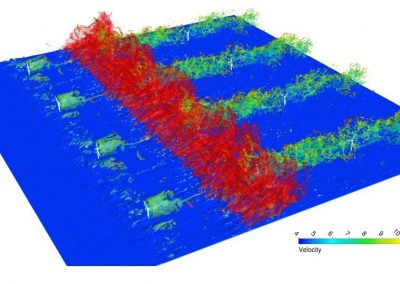

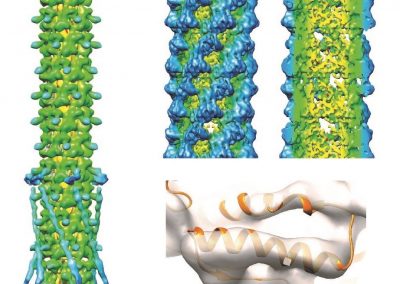

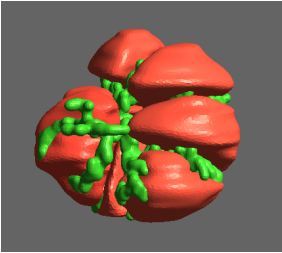

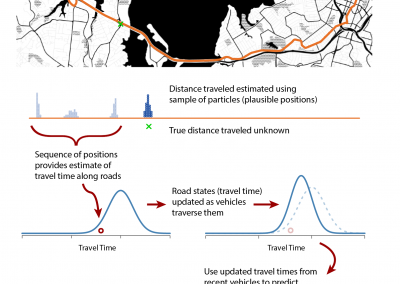

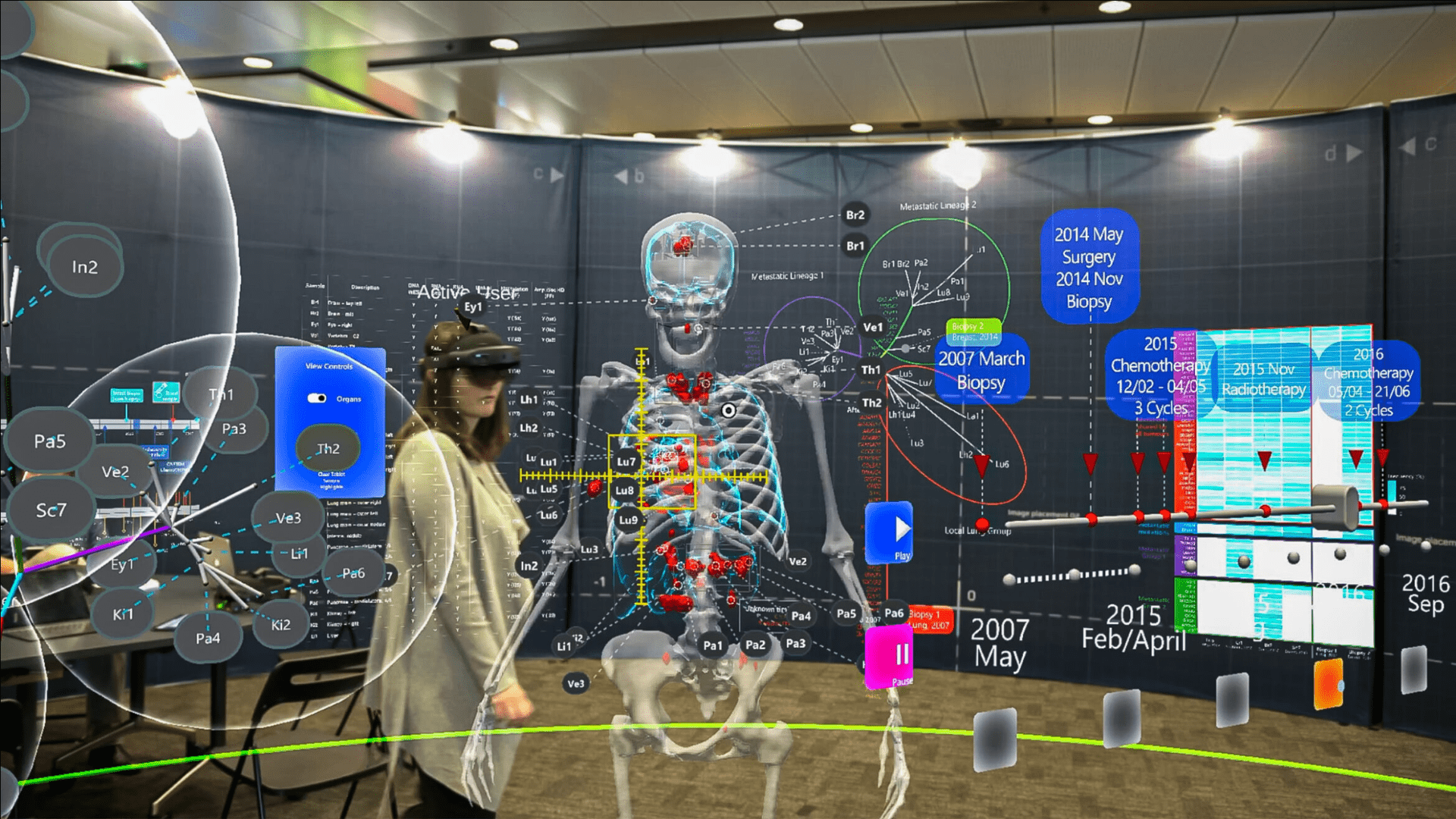

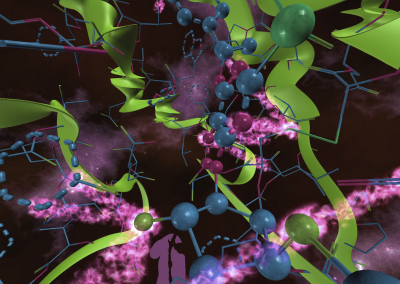

Tamsin Robb: So what we created was a way to bring this information together, including the aspect of time. The model is based on clinical CT scan data from across ten years of a patient’s clinical journey, represented three-dimensionally. Their genomic information is overlaid on their body model at the precise position of the biopsy site it relates to. In this way, we can interpret the genomic data in light of the clinical information. We are working at the intersection of various disciplines: mathematics, biology, pathology, radiology, oncology, and more. Extended reality is turning cancer research into a team sport.

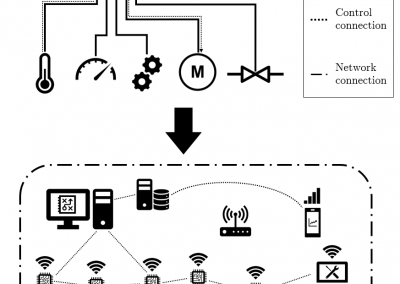

Uwe Rieger: Ben Lawrence was the initiator of this project. He contacted the architecture school, where I run a research laboratory called the “arc/sec Lab for Cyber-Physical Design”. We are looking into cyber-physical design. Our specialty is developing new architectural, functional spaces by combining digital worlds with physical, traditional architecture. What I want to underline is that what we have done in the XRTEP goes beyond what is typically perceived as augmented or mixed reality. By combining digital and physical tools, we have designed a new form of workspace for up to ten cancer researchers.

We have an ongoing relationship with the patient’s family

The space allows the researchers to operate in a collaborative mode to explore cancer through all types of media. The application can also be linked up to tablets, whiteboards and a large screen. We created a new type of laboratory, an optimised private arena, which interlinks cancer, space and time.

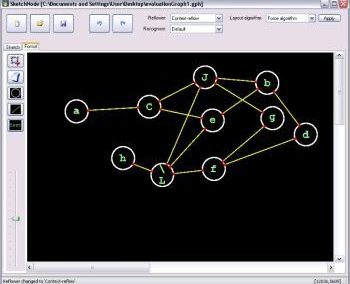

Yinan Liu: I was mainly working on the user interaction and the front end, but also helped to develop a stable back end. Both were needed in order to have a working tool for the researchers and oncologists. Interestingly, we weren’t primarily looking at it as a visualisation. The aspect of collaboration had priority. What would people need in order to complete their tasks? What do we need to consider in order to build a hybrid workspace for them? We also had to design within the limitations of the HoloLens headset. Our main goal was to enable the scientists to gain a quick understanding of the presented information in the most basic form.

“Collaboration” was one of the main themes

Ben Lawrence: The knowledge needed to understand the way cancer spreads is not held by one person, one researcher or one doctor. The brains of multiple different people are needed. The architects created a space in which those knowledge holders could come together, look at the same data at the same time and share their ideas. But this project was not only a collaboration between researchers; it was also carried out in close collaboration with the patient and their family. The patient recognised the opportunity while they were alive and encouraged us to set up this project with data from their cancer after they died. Pathologists were able to remove samples of these cancers that could then be sequenced.

How many hours of coding, planning and cooperative work went into the project?

Where do you see the project in five years?

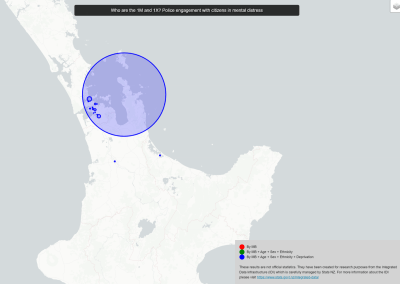

Ben Lawrence: There are a few ways this project could continue. One of the clinical opportunities lies in patient communication. When we explain to patients where the cancer is in their body, extended reality will give us a way to show them as well, and to visualise whether and how the cancer has responded to treatment. We will be able to better explain where their pain comes from or which passages are blocked by tumours, and to let them see which parts of their tumours have shrunk and which have grown.

Tamsin Robb: Absolutely. Patients are undergoing such an emotionally demanding journey; if extended reality could help them to understand their medical information, that may be particularly valuable. What I hope we have also done is create a blueprint for what some clinical decision-making tools could look like in the future. This endeavour would be a substantial second, separate project. The natural clinical scenario where this vision fits is the molecular tumour board, where medical experts from a range of disciplines typically come together to discuss a patient’s case. These experts try to make clinical decisions with all of the clinical information right in front of them.

With extended reality, they can be guided by visualised molecular information, like DNA sequencing of one or more tumours, on top of all of the more traditional technologies that we have to provide information about a patient.

Uwe Rieger: While XRTEP is a prototype that we have been developing for a specific condition, we are currently looking into how we could expand it to the next logical stage. On the one hand, we want to expand this tool so that multiple people offsite can participate. Ideally, you won’t have to be physically present to take part. That is another advantage of Metaverse technology: we are moving towards being able to share and discuss information three-dimensionally from different locations. You won’t need abstract tools to access and share information. It will be direct and tangible.

On the other hand, there are all types of medical scans used in cancer research. They are all three-dimensional; so far, however, they have been represented in two-dimensional form. So we are looking into different pipelines for bringing this information directly into the three-dimensional worlds that we are creating. Some types of medical scans might be rendered in 3D more accurately than others.

Yinan Liu: If you look at the development process, whether it’s HoloLens or Magic Leap, it is all moving towards a new era of spatial computing. If we don’t just look at this technology as an entertainment headset, and if it becomes (and I think it will) as popular or as common as mobile phones, that will push usability much further forward. And of course, we also know that there have been other medical investigations ever since the HoloLens came out. Since we can visualise things spatially, a lot has happened. I definitely see that progress continuing in medicine over the next five years.

Tamsin Robb: It is an exploding area of activity. There are a lot of projects underway that aren’t really advertised in the public domain. We have learned about projects for guiding surgery remotely to enable surgery to happen in rural regions where there aren’t specialists available. There are also many medical education tools in the works for training the medical workforce of the future.

It’s just a matter of finding the best opportunities to exploit this technology in the medical domain. We started the project thinking that our concept was really unusual, but we have realised that we have a lot of friends.

Factbox

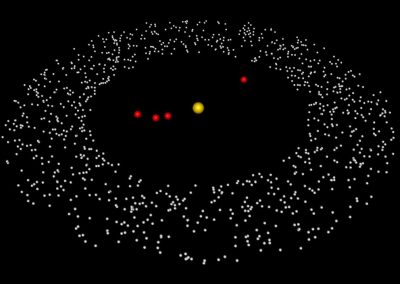

A patient with inoperable cancer donated their tissue for an investigation into the progression of their disease. At the time of their passing, they had 89 separate tumours. Over half of these were genome-sequenced, yielding precise detail about how the cancer had moved through their body.

The volume and complexity of the information obtained posed new challenges in representing data. Also at issue was how to shift and strengthen relations between the various siloed disciplines working with that data to contribute to cancer research. The problem was visual, spatial, temporal, and social.

This was a design problem to be pursued in collaboration with spatial practitioners.

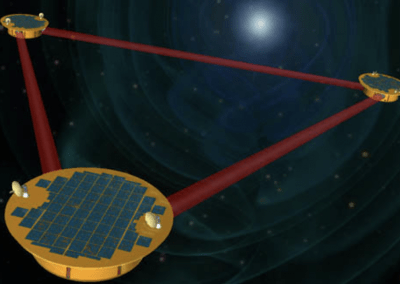

XR Tumour Evolution Project (XRTEP) is an immersive, extended-reality arena, experienced via Microsoft’s HoloLens 2. It draws on front-line architectural research, technology and practice in combining a new mode of human-computer interaction (where physical and digital components are interlinked) with traditional spatial-material practices.

The space is defined by a fabric-skinned, lightweight foldable structure 3 metres high and 8.5 metres in diameter. At its heart stands an interactive holographic model of the patient’s skeleton, organs, and tumours. It is a shared spatial-temporal index. Linked datasets are arranged in three concentric layers around the model. The disciplinary specificity of the data increases with distance from the model, allowing moments of intra- or inter-disciplinary focus at different points in the space.

XRTEP brings together cancer experts and furnishes them with tools and data in space to enable real-time collaboration around how and why cancer spreads in the human body. Researchers watch tumours grow, shrink, and spread over time, discern patterns in the data, and connect them to their spatial origins.1,2

This article was published in JATROS earlier this year. https://www.universimed.com/at/article/onkologie/extended-reality-244548

Source

1. Designers Institute of New Zealand: Best Design Awards. University of Auckland, School of Architecture and Planning; Faculty of Medical and Health Sciences; and Centre for eResearch: XR Tumour Evolution Project. Online at https://bestawards.co.nz/value-of-design-award/university-of-auckland-school-of-architecture-1/xr-tumour-evolution-project/. Accessed on 22.11.2022

2. Ars Electronica 2022: Tumour Evolution in Extended Reality (XR): A collaborative XR tool to examine tumour evolution. Online at https://www.ars.nz/tumour-evolution-in-extended-reality/. Accessed on 22.11.2022

See more case study projects

Our Voices: using innovative techniques to collect, analyse and amplify the lived experiences of young people in Aotearoa

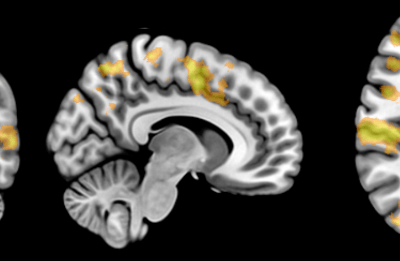

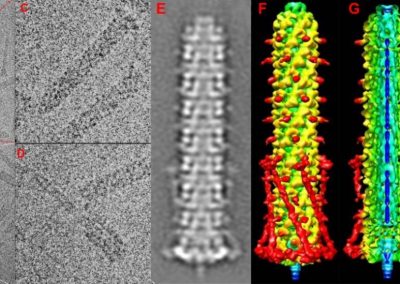

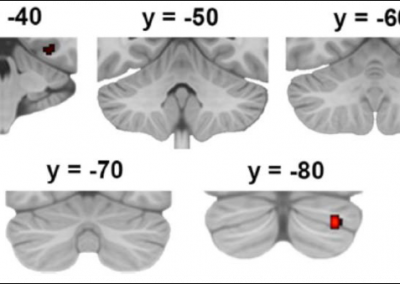

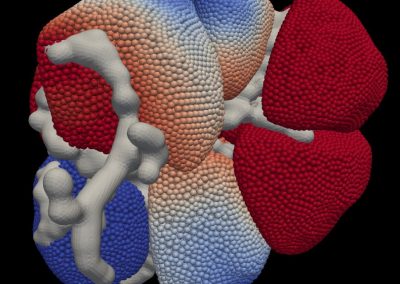

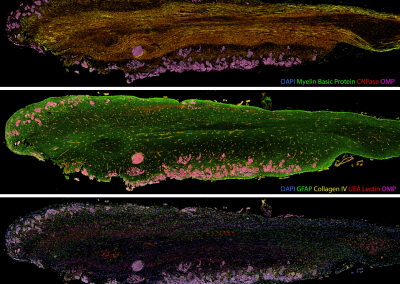

Painting the brain: multiplexed tissue labelling of human brain tissue to facilitate discoveries in neuroanatomy

Detecting anomalous matches in professional sports: a novel approach using advanced anomaly detection techniques

Benefits of linking routine medical records to the GUiNZ longitudinal birth cohort: Childhood injury predictors

Using a virtual machine-based machine learning algorithm to obtain comprehensive behavioural information in an in vivo Alzheimer’s disease model

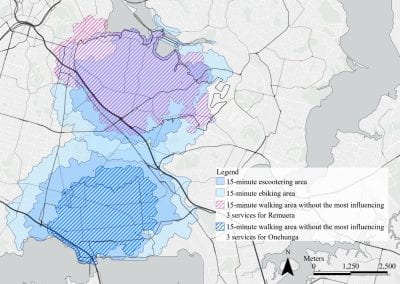

Mapping livability: the “15-minute city” concept for car-dependent districts in Auckland, New Zealand

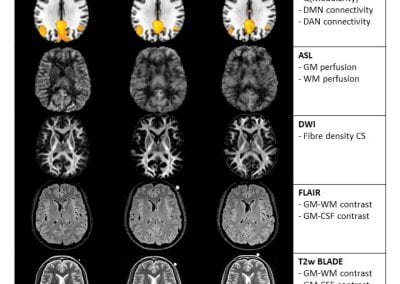

Travelling Heads – Measuring Reproducibility and Repeatability of Magnetic Resonance Imaging in Dementia

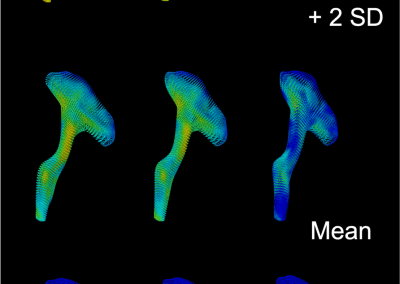

Novel Subject-Specific Method of Visualising Group Differences from Multiple DTI Metrics without Averaging

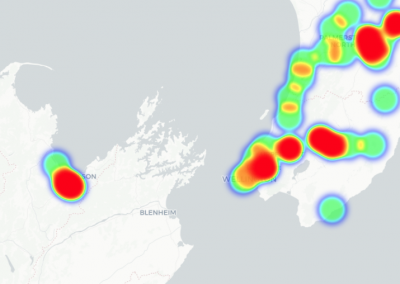

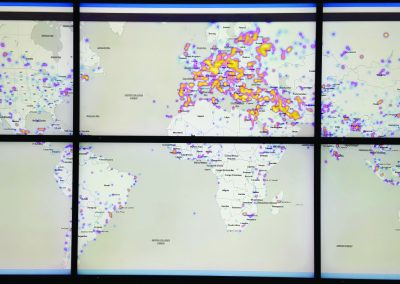

Re-assess urban spaces under COVID-19 impact: sensing Auckland social ‘hotspots’ with mobile location data

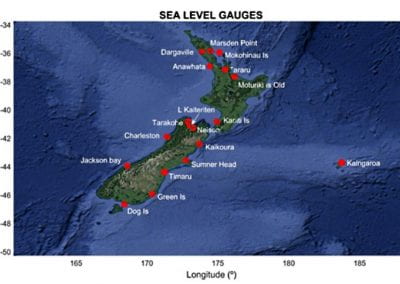

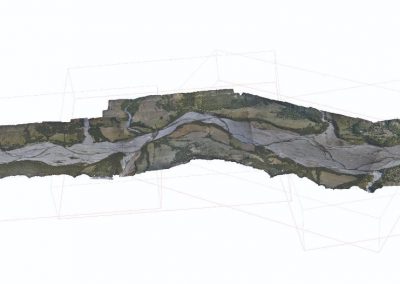

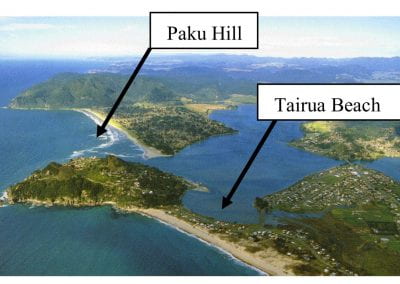

Aotearoa New Zealand’s changing coastline – Resilience to Nature’s Challenges (National Science Challenge)

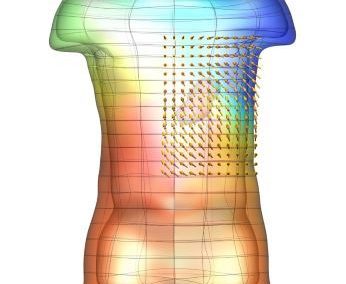

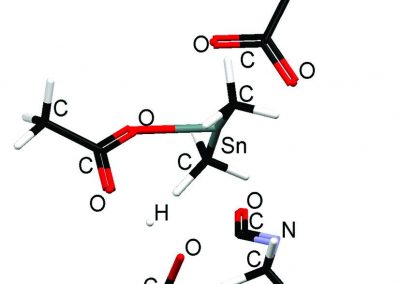

Proteins under a computational microscope: designing in-silico strategies to understand and develop molecular functionalities in Life Sciences and Engineering

Coastal image classification and nalysis based on convolutional neural betworks and pattern recognition

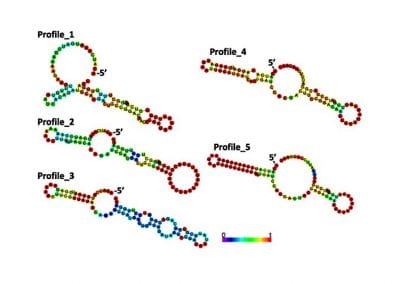

Determinants of translation efficiency in the evolutionarily-divergent protist Trichomonas vaginalis

Measuring impact of entrepreneurship activities on students’ mindset, capabilities and entrepreneurial intentions

Using Zebra Finch data and deep learning classification to identify individual bird calls from audio recordings

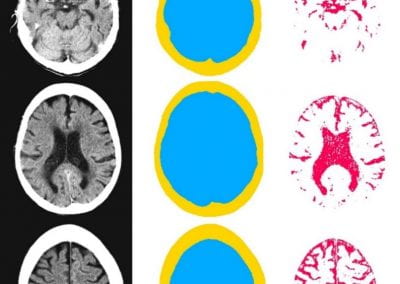

Automated measurement of intracranial cerebrospinal fluid volume and outcome after endovascular thrombectomy for ischemic stroke

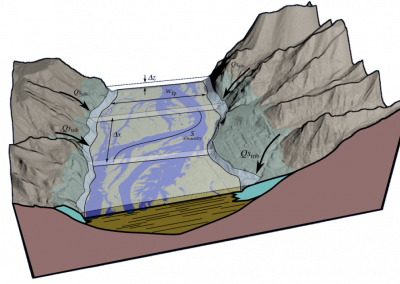

Using simple models to explore complex dynamics: A case study of macomona liliana (wedge-shell) and nutrient variations

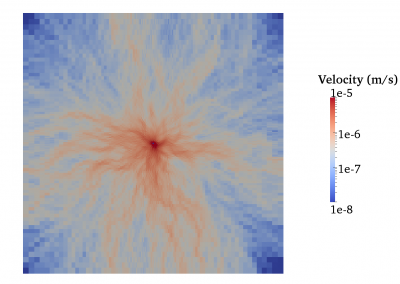

Fully coupled thermo-hydro-mechanical modelling of permeability enhancement by the finite element method

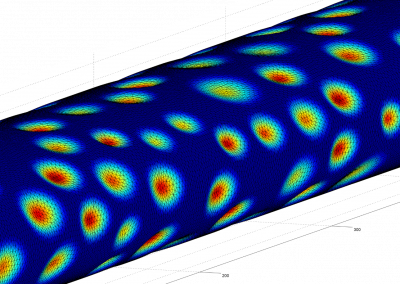

Modelling dual reflux pressure swing adsorption (DR-PSA) units for gas separation in natural gas processing

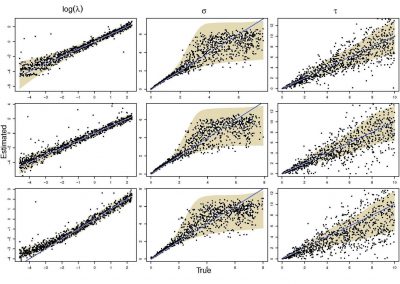

Molecular phylogenetics uses genetic data to reconstruct the evolutionary history of individuals, populations or species

Wandering around the molecular landscape: embracing virtual reality as a research showcasing outreach and teaching tool