VRhook: A Data Collection Tool for VR Motion Sickness Research

Elliott Wen, Research Fellow, Auckland Bioengineering Institute

Introduction

VR gaming has gained widespread popularity in recent years, and annual market revenue is projected to reach $84 billion by 2028 [1]. However, up to 40% of users experience VR motion sickness, with symptoms such as fatigue, disorientation, and nausea [2, 3]. These adverse effects can severely undermine the user experience. To warn users about potential motion sickness, VR game stores such as Oculus and Steam display a comfort rating for each game. These ratings are assigned by human experts who identify common risk factors in the application, such as frequent multi-axis rotation, excessive movement speed, and the use of a wide field of view [4]. However, this rating process is highly labor-intensive and does not scale with the growing game industry.

Recently, researchers have proposed machine learning approaches to detect the presence of motion sickness [5, 6]. Despite this progress, many of these studies point out that their models are constrained by the size of their training datasets. To improve generalization, these models need larger datasets containing many thousands of video clips of VR experiences together with the corresponding risk factors [7]. Unfortunately, conventional data acquisition strategies cannot meet this requirement. Manual annotation by human experts is expensive and does not scale. An alternative is to build custom VR games for data collection; however, models trained on such games may not generalize to commercial titles. Finally, modifying existing VR games to extract the data would require access to their source code, which is practically impossible because the games are proprietary.

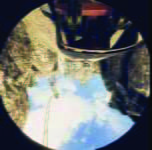

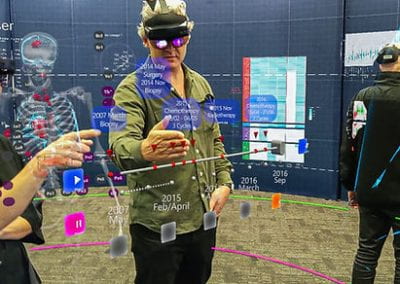

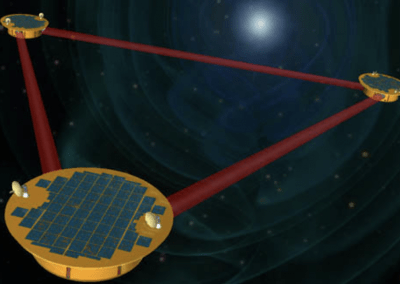

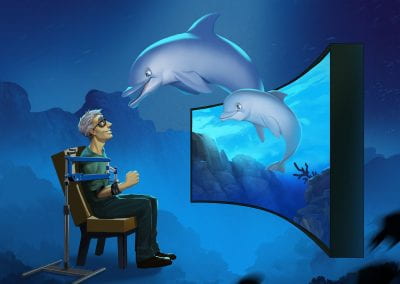

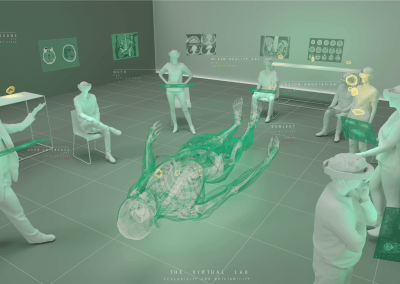

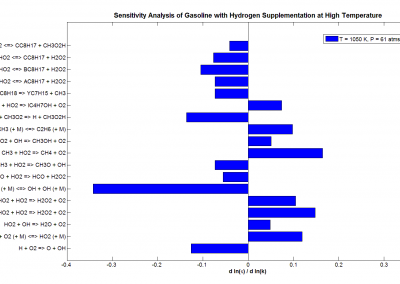

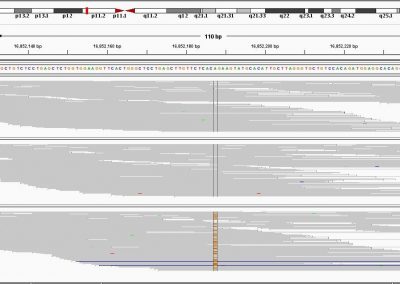

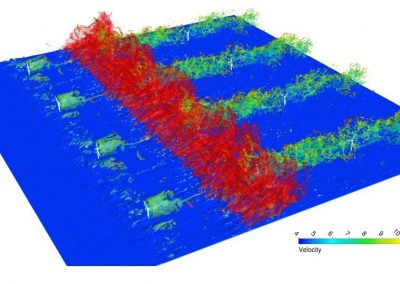

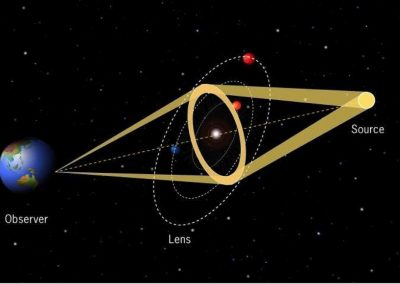

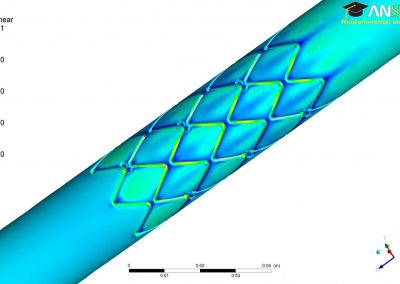

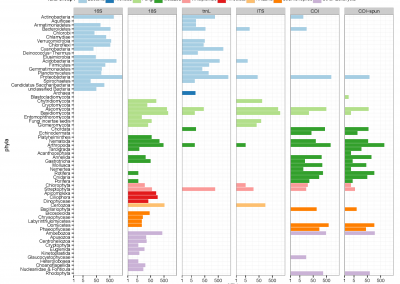

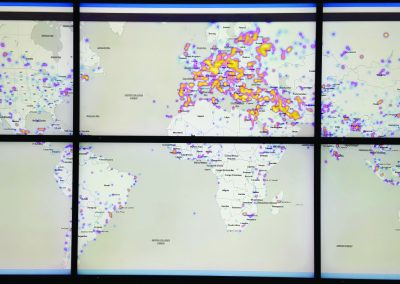

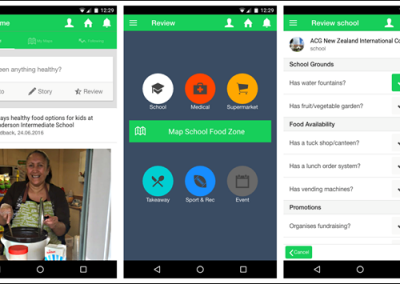

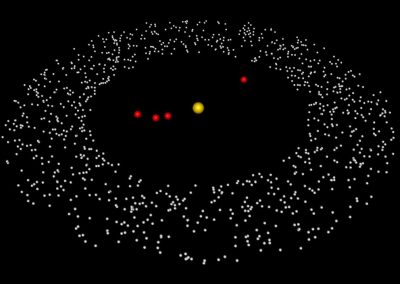

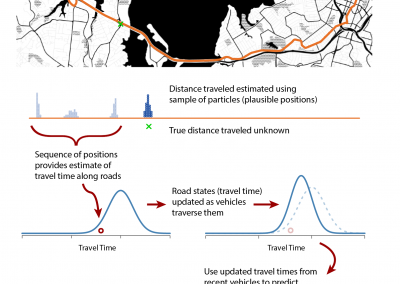

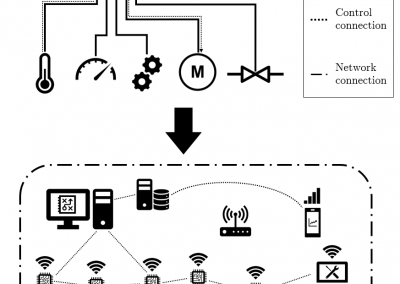

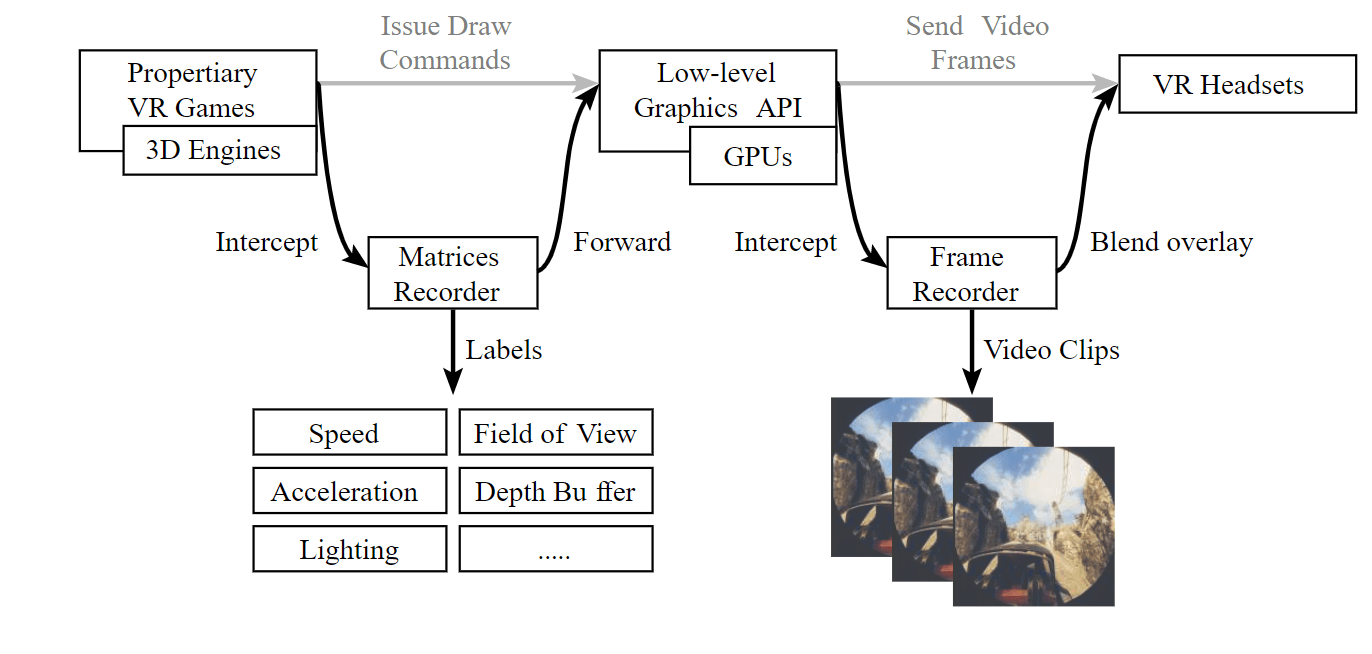

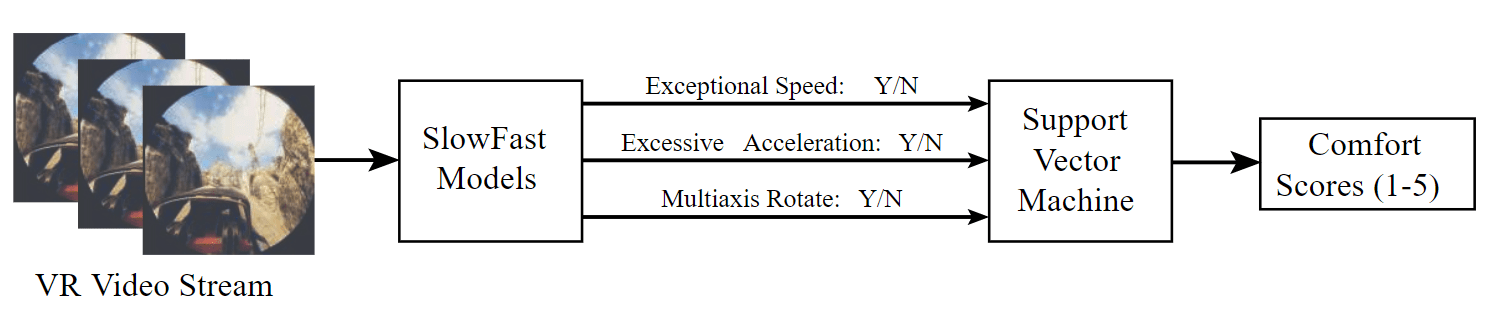

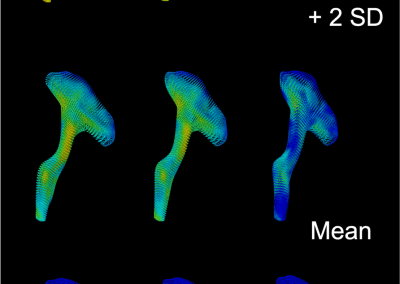

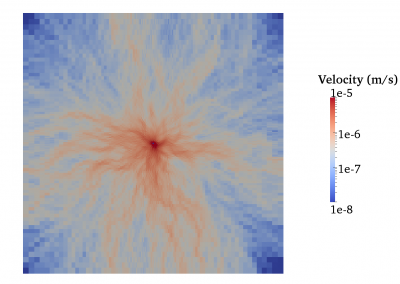

To overcome these challenges, we present VRhook, a novel data collection tool that automatically extracts labeled data from a wide range of real-world VR games without access to their source code (see Figure 1). VRhook relies on dynamic hooking [8]: we inject custom code into the game's memory at run time to intercept low-level graphics pipeline data, in particular video frames and their associated transformation matrices. In computer graphics, transformation matrices apply motion to 3D models and project them into a two-dimensional video frame for presentation. These matrices can therefore be used to derive useful labels such as rotation, speed, and acceleration, which have been linked to motion sickness [9, 10]. Beyond data capture, VRhook can inject additional rendering commands to display in-game overlay information. This allows us to incorporate a previously validated dial mechanism [11] to collect self-reported comfort scores from users. By combining the extracted labels, the self-reported comfort scores, and the video frames, we can build many of the motion sickness detection models proposed in prior work. In this way, our tool could stimulate new machine-learning-based research on VR sickness.
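
To make the link between transformation matrices and motion labels concrete, here is a minimal Python sketch. It assumes that the per-frame view matrix (the rigid world-to-camera part of the MVP transform) is available as a row-major 4 × 4 list in the column-vector convention; how VRhook separates it from the captured matrices is not described here, and all function names are hypothetical. The camera position follows from p = −Rᵀt, speed and acceleration from finite differences across frames, and rotation from the angle of the relative rotation between consecutive frames.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical representation: row-major 4x4 world-to-camera (view) matrix,
# assumed rigid, i.e. V = [R | t; 0 0 0 1] with column vectors.
Matrix4 = List[List[float]]
Vec3 = Tuple[float, ...]


def camera_position(view: Matrix4) -> Vec3:
    """Recover the camera's world position p = -R^T t from a rigid view matrix."""
    r = [row[:3] for row in view[:3]]
    t = [view[i][3] for i in range(3)]
    # (R^T t)_i = sum_j R[j][i] * t[j]
    return tuple(-sum(r[j][i] * t[j] for j in range(3)) for i in range(3))


def rotation_angle(view_a: Matrix4, view_b: Matrix4) -> float:
    """Angle (radians) of the relative rotation R_b R_a^T between two frames."""
    # trace(R_b R_a^T) equals the element-wise sum of R_b[i][j] * R_a[i][j]
    tr = sum(view_b[i][j] * view_a[i][j] for i in range(3) for j in range(3))
    # Clamp to [-1, 1] to guard acos against floating-point drift
    cos_theta = max(-1.0, min(1.0, (tr - 1.0) / 2.0))
    return math.acos(cos_theta)


def motion_profile(views: Sequence[Matrix4], fps: float):
    """Return per-step speed (units/s), change of speed (units/s^2),
    and angular speed (rad/s) from consecutive view matrices."""
    positions = [camera_position(v) for v in views]
    speeds = [math.dist(p, q) * fps for p, q in zip(positions, positions[1:])]
    accels = [(s1 - s0) * fps for s0, s1 in zip(speeds, speeds[1:])]
    angular = [rotation_angle(a, b) * fps for a, b in zip(views, views[1:])]
    return speeds, accels, angular
```

For a camera that moves 0.1 world units per frame along the x axis at 90 frames per second without rotating, the speeds come out at about 9 units/s, with near-zero acceleration and angular speed. The acceleration in this sketch is the rate of change of speed; a full acceleration vector would difference velocity vectors instead. Units are game-world units, which need not correspond to metres.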

Figure 1: Backbone techniques of our data collection tool

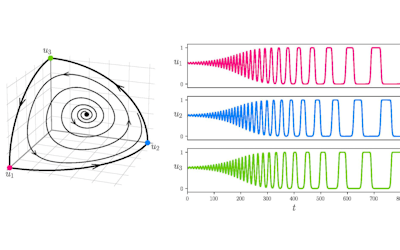

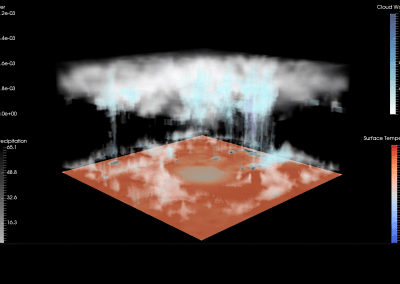

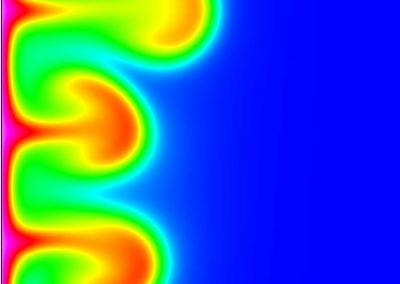

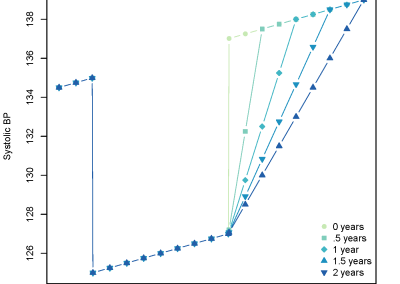

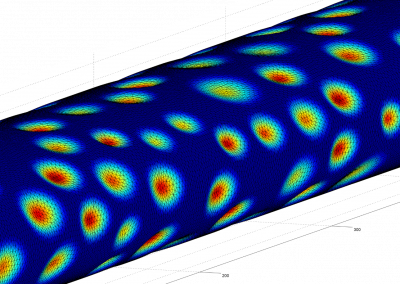

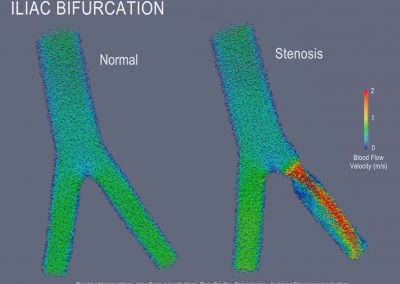

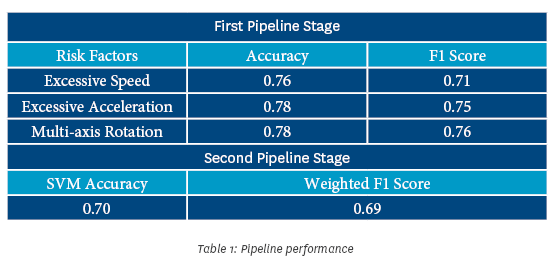

GPU support from Nectar

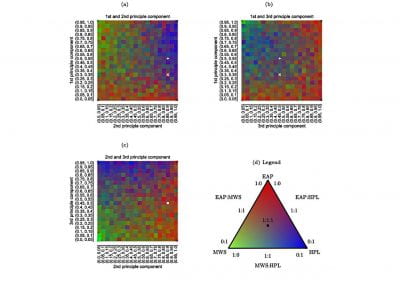

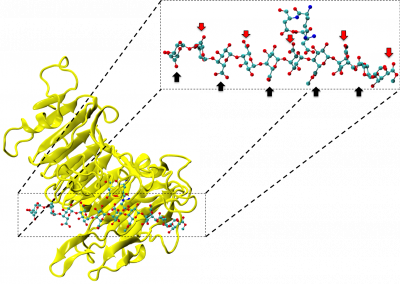

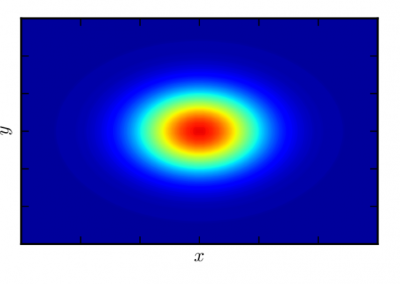

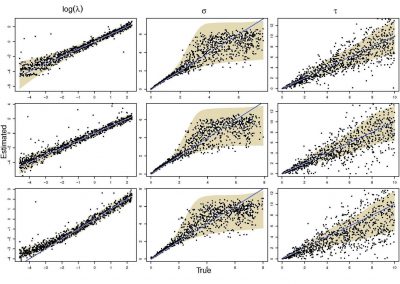

We deployed VRhook on Nectar GPU instances, automated its execution, and generated a dataset from five real-world roller coaster games. Each game session lasted 4 minutes on average. In total, we gathered approximately 1,200 one-second video clips. Each clip contains 180 video frames (90 per eye) at a resolution of 1832 × 960. We recorded and analyzed the model-view-projection (MVP) matrices of these frames to generate three binary labels for each clip: 1) fast/slow speed, 2) fast/slow acceleration, and 3) multi-axis rotation detected/not detected. Using this dataset, we developed a machine learning pipeline that detects the presence of factors contributing to motion sickness and predicts the comfort score for a given gameplay video, as shown in Figure 2. We trained the model on two 32 GB V100 GPUs from the Nectar cloud for 5 epochs with a batch size of 32 and a learning rate of 1 × 10⁻⁴; training took 12 hours of wall time. Table 1 shows the performance of our system. This work was published at the ACM Symposium on User Interface Software and Technology (UIST 2022). We are grateful for the valuable technical support provided by many friendly CeR staff members working on the Nectar cloud.
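
The clip-labelling step can be sketched as follows. This is a hypothetical illustration rather than the pipeline's actual code: the article does not state the thresholds or aggregation rules used, so the values below (mean speed, peak absolute acceleration, and a per-axis angular-rate threshold where two or more active axes count as multi-axis rotation) are placeholders. It also assumes that per-frame motion values for one eye have already been derived from the captured matrices.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence

FRAMES_PER_CLIP = 90  # from the text: 90 frames per eye in each one-second clip

# Hypothetical thresholds: the article does not report the values actually used.
SPEED_THRESHOLD = 5.0      # world units per second
ACCEL_THRESHOLD = 2.0      # world units per second^2
ROTATION_THRESHOLD = 0.35  # radians per second on a single axis (about 20 deg/s)


@dataclass
class FrameMotion:
    """Hypothetical per-frame motion record derived from the view matrices."""
    speed: float
    accel: float
    yaw_rate: float
    pitch_rate: float
    roll_rate: float


def split_into_clips(frames: Sequence[FrameMotion],
                     clip_len: int = FRAMES_PER_CLIP) -> List[Sequence[FrameMotion]]:
    """Cut a per-eye frame stream into consecutive one-second clips,
    dropping any incomplete clip at the end."""
    return [frames[i:i + clip_len]
            for i in range(0, len(frames) - clip_len + 1, clip_len)]


def label_clip(clip: Sequence[FrameMotion]) -> Dict[str, bool]:
    """Assign the three binary labels to one clip."""
    mean_speed = sum(f.speed for f in clip) / len(clip)
    peak_accel = max(abs(f.accel) for f in clip)
    per_axis_rates = (
        [f.yaw_rate for f in clip],
        [f.pitch_rate for f in clip],
        [f.roll_rate for f in clip],
    )
    # Count the axes whose peak angular rate exceeds the threshold
    axes_rotating = sum(
        1 for rates in per_axis_rates
        if max(abs(r) for r in rates) > ROTATION_THRESHOLD
    )
    return {
        "fast_speed": mean_speed > SPEED_THRESHOLD,
        "fast_acceleration": peak_accel > ACCEL_THRESHOLD,
        "multi_axis_rotation": axes_rotating >= 2,
    }
```

At one clip per second, five games of about 4 minutes each give roughly 5 × 4 × 60 = 1,200 clips, which is consistent with the dataset size reported above.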

Table 1: Pipeline performance

Figure 2: Machine learning pipeline running on Nectar GPUs.

References

- [1] “Virtual reality in gaming market size.” [Online]. Available: https://www.fortunebusinessinsights.com/industry-reports/virtual-reality-gaming-market-100271

- [2] S. Martirosov and P. Kopecek, “Cyber sickness in virtual reality-literature review,” Annals of DAAAM & Proceedings, vol. 28, 2017.

- [3] D. M. Johnson, “Introduction to and review of simulator

- [4] E. Chang, H. T. Kim, and B. Yoo, “Virtual reality sickness: a review of causes and measurements,” International Journal of Human–Computer Interaction, vol. 36, no. 17, pp. 1658–1682, 2020.

- [5] H. Oh and W. Son, “Cybersickness and its severity arising from virtual reality content: A comprehensive study,” Sensors, vol. 22, no. 4, p. 1314, 2022.

- [6] S. Hell and V. Argyriou, “Machine learning architectures to predict motion sickness using a virtual reality rollercoaster simulation tool,” in 2018 IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR). IEEE, 2018, pp. 153–156.

- [7] N. Padmanaban, T. Ruban, V. Sitzmann, A. M. Norcia, and G. Wetzstein, “Towards a machine-learning approach for sickness prediction in 360° stereoscopic videos,” IEEE Transactions on Visualization and Computer Graphics, vol. 24, no. 4, pp. 1594–1603, 2018.

- [8] J. Lopez, L. Babun, H. Aksu, and A. S. Uluagac, “A survey on function and system call hooking approaches,” Journal of Hardware and Systems Security, vol. 1, no. 2, pp. 114–136, 2017.

- [9] R. H. So, A. Ho, and W. Lo, “A metric to quantify virtual scene movement for the study of cybersickness: Definition, implementation, and verification,” Presence, vol. 10, no. 2, pp. 193–215, 2001.

- [10] F. Bonato, A. Bubka, S. Palmisano, D. Phillip, and G. Moreno, “Vection change exacerbates simulator sickness in virtual environments,” Presence: Teleoperators and Virtual Environments, vol. 17, no. 3, pp. 283–292, 2008.

- [11] N. McHugh, S. Jung, S. Hoermann, and R. W. Lindeman, “Investigating a physical dial as a measurement tool for cybersickness in virtual reality,” in 25th ACM Symposium on Virtual Reality Software and Technology, 2019, pp. 1–5.

See more case study projects

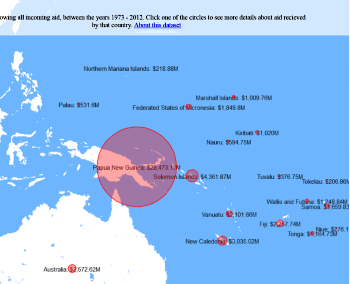

Our Voices: using innovative techniques to collect, analyse and amplify the lived experiences of young people in Aotearoa

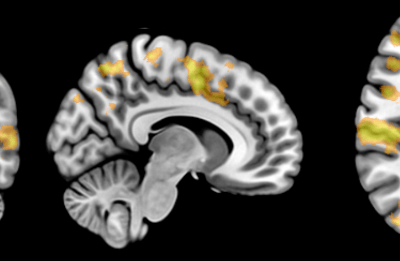

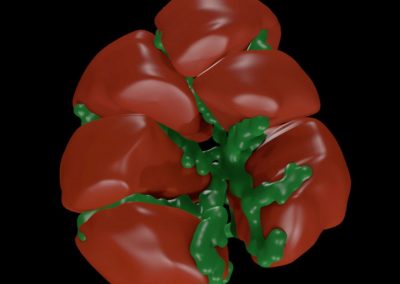

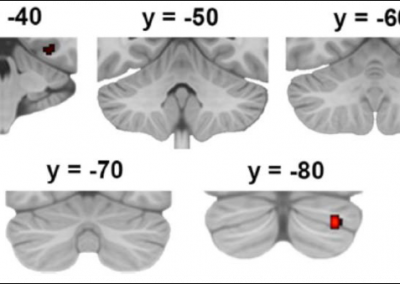

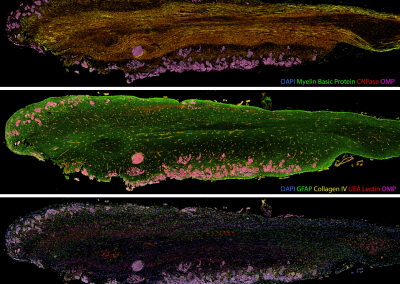

Painting the brain: multiplexed tissue labelling of human brain tissue to facilitate discoveries in neuroanatomy

Detecting anomalous matches in professional sports: a novel approach using advanced anomaly detection techniques

Benefits of linking routine medical records to the GUiNZ longitudinal birth cohort: Childhood injury predictors

Using a virtual machine-based machine learning algorithm to obtain comprehensive behavioural information in an in vivo Alzheimer’s disease model

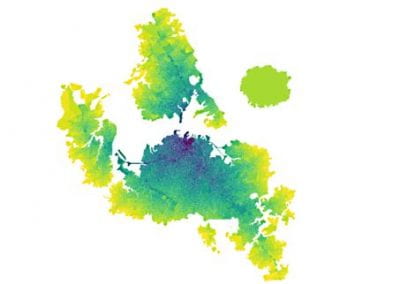

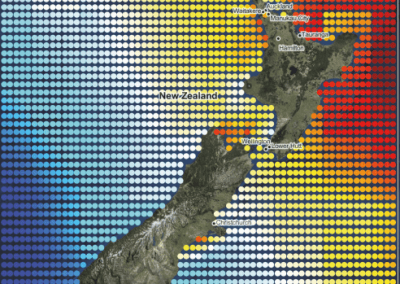

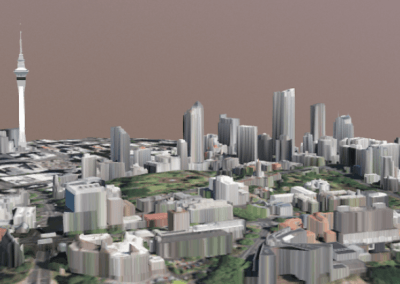

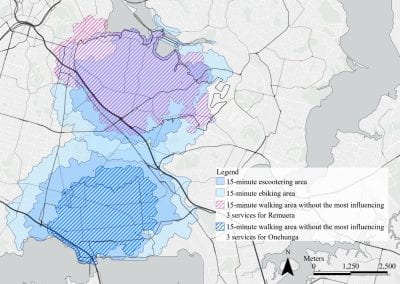

Mapping livability: the “15-minute city” concept for car-dependent districts in Auckland, New Zealand

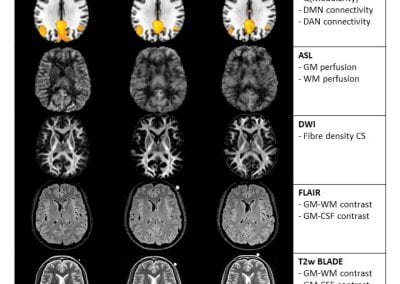

Travelling Heads – Measuring Reproducibility and Repeatability of Magnetic Resonance Imaging in Dementia

Novel Subject-Specific Method of Visualising Group Differences from Multiple DTI Metrics without Averaging

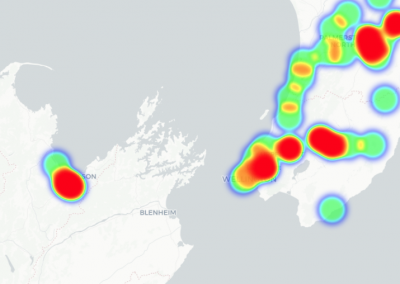

Re-assess urban spaces under COVID-19 impact: sensing Auckland social ‘hotspots’ with mobile location data

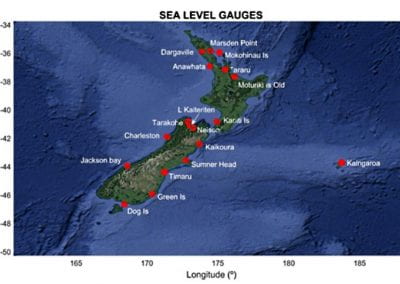

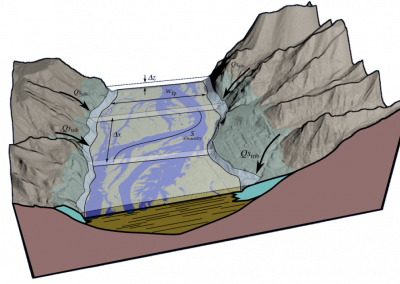

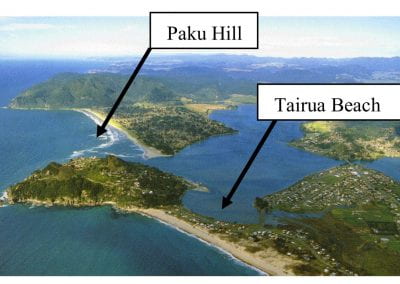

Aotearoa New Zealand’s changing coastline – Resilience to Nature’s Challenges (National Science Challenge)

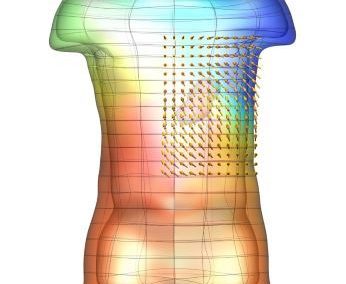

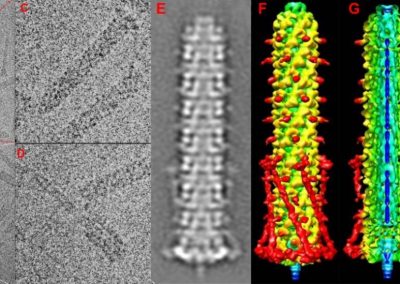

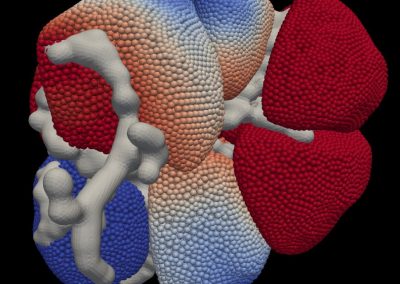

Proteins under a computational microscope: designing in-silico strategies to understand and develop molecular functionalities in Life Sciences and Engineering

Coastal image classification and nalysis based on convolutional neural betworks and pattern recognition

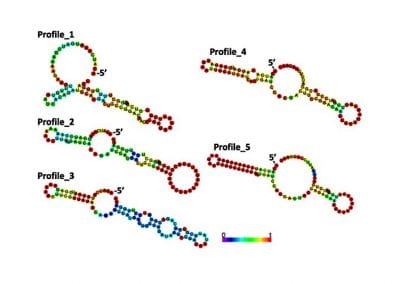

Determinants of translation efficiency in the evolutionarily-divergent protist Trichomonas vaginalis

Measuring impact of entrepreneurship activities on students’ mindset, capabilities and entrepreneurial intentions

Using Zebra Finch data and deep learning classification to identify individual bird calls from audio recordings

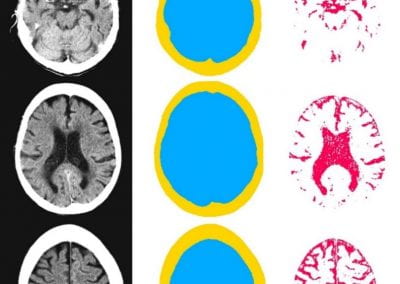

Automated measurement of intracranial cerebrospinal fluid volume and outcome after endovascular thrombectomy for ischemic stroke

Using simple models to explore complex dynamics: A case study of macomona liliana (wedge-shell) and nutrient variations

Fully coupled thermo-hydro-mechanical modelling of permeability enhancement by the finite element method

Modelling dual reflux pressure swing adsorption (DR-PSA) units for gas separation in natural gas processing

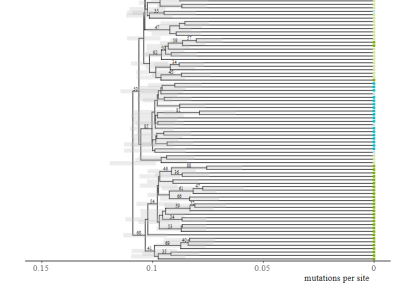

Molecular phylogenetics uses genetic data to reconstruct the evolutionary history of individuals, populations or species

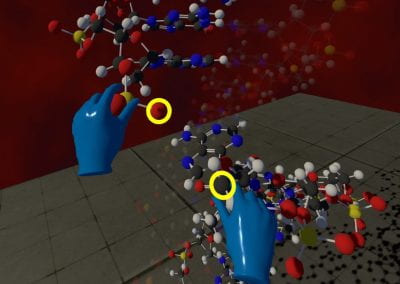

Wandering around the molecular landscape: embracing virtual reality as a research showcasing outreach and teaching tool